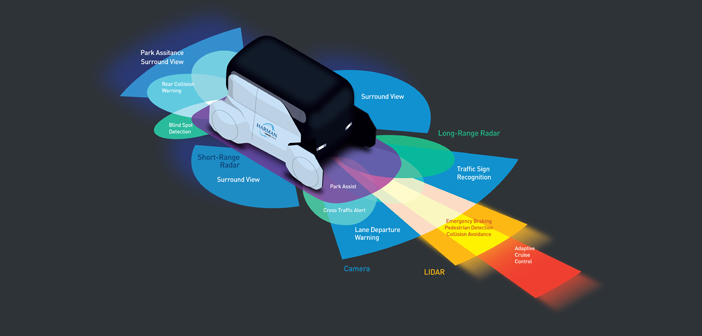

With safety paramount, Harman predicts that a three-way approach to sensors will be necessary for ADAS and autonomous driving.

Defining the right sensors for advanced driver assistance systems (ADAS) and higher levels of autonomy remains one area of automotive development where opinions are divided. For some OEMs, cameras and radar systems will be sufficient to enable automated driving features, but others feel that these sensors don’t provide robust enough data for a vehicle to make decisions on its own.

Under the radar and behind the lens

Radar is the most prevalent detection sensor on the market right now. To date, automotive-grade radar systems have tended to deliver their information selectively, and with a limited detection resolution. Radar can also be disrupted by similar sensors and isn’t immune to making mistakes when it comes to recognizing objects.

Cameras have their own limits too; their performance is affected by light conditions and can be compromised by inclement weather. They tend not to be robust enough to provide detailed information for autonomy either. That’s why Harman believes that lidar will be a crucial element for successful deployment of Level 2+ autonomy.

Why lidar holds an advantage

Lidar (Light Detection and Ranging) uses light pulses from a laser source to scan the environment. To date, lidar has been too expensive – often running to thousands of euros for the componentry – to roll out as a commodity installation. Historically it has been prone to errors, too, for example in snow or for detecting objects that are very close.

We can expect that to change with the next generation of solid-state lidar, set to be available in 2020. Lidar should be considered as a third sensor technology designed to augment, not replace, radar and camera sensors. It’s worth noting that none of the sensors – radar, camera or lidar – can cover every environmental situation, and having all three will provide the most powerful solution.

Immunity to interference

With higher resolution, now 0.1° x 0.1°, at distances of up to 200m, lidar can offer a high-precision 3D image of the vehicle’s surroundings that can be comprehensively processed by ADAS. Its immunity to interference from sensors of the same type and its ability to perform completely independently of daylight and ambient light levels offer advantages over cameras and radar. Its accuracy and detail, for example being able to see which way a pedestrian is facing, will be relevant in urban environments where objects and hazards can be much closer and appear more quickly.
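To put the 0.1° figure in perspective, the short sketch below converts an angular resolution into the approximate gap between neighbouring measurement points at a given distance. It is simple geometry rather than a model of any particular sensor; the function names and the 0.5m pedestrian width are assumptions chosen for illustration.

```python
import math


def point_spacing_m(angular_resolution_deg: float, distance_m: float) -> float:
    """Approximate gap between two adjacent lidar samples at a given range.

    Pure geometry: the chord subtended by the angular step at that distance.
    """
    half_angle = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * distance_m * math.tan(half_angle)


def samples_across(object_width_m: float,
                   angular_resolution_deg: float,
                   distance_m: float) -> int:
    """Rough count of whole sample gaps that fit across an object's width."""
    return int(object_width_m // point_spacing_m(angular_resolution_deg, distance_m))


if __name__ == "__main__":
    # 0.1 degree resolution and 200 m range are the figures quoted in the article.
    print(f"spacing at 200 m: {point_spacing_m(0.1, 200.0):.2f} m")  # about 0.35 m

    # The 0.5 m pedestrian width is a hypothetical value for illustration only.
    for distance in (200.0, 20.0):
        count = samples_across(0.5, 0.1, distance)
        print(f"sample gaps across a 0.5 m object at {distance:.0f} m: {count}")
```

At 200m the points fall roughly 35cm apart, so a distant pedestrian is covered by only a sample or two per scan line, while at 20m the same person is covered by more than a dozen. That difference is consistent with fine detail, such as which way a pedestrian is facing, being most recoverable at the shorter distances typical of urban driving.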

Solid-state lidar development

The breakthrough for lidar is the transition from mechanical to solid-state. Current mechanical systems in automotive applications are bulky and can be prone to vibration and ingress. Next-generation solid-state lidar systems will offer the same performance in a smaller package and, significantly, will be up to 80% cheaper. Harman believes that, together with a camera and radar, a single, forward-facing, high-resolution lidar with a long range (say 50-200m) will be enough for cars that still retain a human driver. Robotaxis, however, would need long-range lidar at both the front and rear, and two additional short-range (up to 20m) lidar systems on each side of the vehicle.

Combination challenge

For the utmost safety, it will be crucial that all three data sources be combined to create the most comprehensive view of the environment. External data from the cloud and V2X infrastructure will aid in creating this comprehensive picture, too. Sensor fusion is therefore key for robust system development in the field of automated driving.
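As a rough illustration of why combining sensors helps, the sketch below fuses three independent distance estimates for the same object using inverse-variance weighting, a standard textbook technique. It is not a description of Harman's fusion approach; the `RangeEstimate` type, the `fuse_ranges` function and all of the numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeEstimate:
    sensor: str        # label only, e.g. "radar"
    distance_m: float  # measured distance to the object
    std_dev_m: float   # assumed 1-sigma uncertainty of that measurement


def fuse_ranges(estimates: List[RangeEstimate]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent range estimates.

    Returns (fused distance, fused standard deviation). More certain
    sensors get proportionally more weight.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / (e.std_dev_m ** 2) for e in estimates]
    total_weight = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total_weight
    fused_std = (1.0 / total_weight) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    # Hypothetical readings; the uncertainties are invented for illustration.
    readings = [
        RangeEstimate("radar", 50.0, 1.0),
        RangeEstimate("camera", 52.0, 2.0),
        RangeEstimate("lidar", 50.5, 0.5),
    ]
    distance, std_dev = fuse_ranges(readings)
    print(f"fused distance: {distance:.2f} m, uncertainty: {std_dev:.2f} m")
```

With these made-up inputs, the fused uncertainty comes out lower than that of the best single sensor, which is the basic argument for using all three data sources together. A production system would also have to handle time alignment, object association and sensor faults, none of which is attempted here.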

Processing this data in the most efficient way will put pressure on hardware in the vehicle. Distributed architectures with multiple ECUs will not be as efficient, and we predict that a core, centralized architecture will be necessary in the future. For this to succeed, it will be imperative that the hardware and software provided by different suppliers work together seamlessly, reinforcing the need for comprehensive system integrators at Tier 1 and OEM level. Such an architecture enables parallel processing of sensor data alongside the processing of ‘regions of interest’, which will ultimately improve detection rates by these systems.

Solid-state set for 2021

With solid-state lidar systems offering crucial benefits, their roll-out into production cars is, unsurprisingly, not too far away. InnovizOne, the solid-state MEMS-based lidar solution from Harman partner Innoviz, is planned to be the first solid-state lidar on a production car. It is planned for the first generation of autonomous BMW vehicles scheduled for 2021. Together with an expected surge in applications for robotaxis soon after, the road ahead for lidar is extremely bright.

By Robert Kempf, vice president Sales and Business Development for ADAS/Autonomous Driving at Harman International
