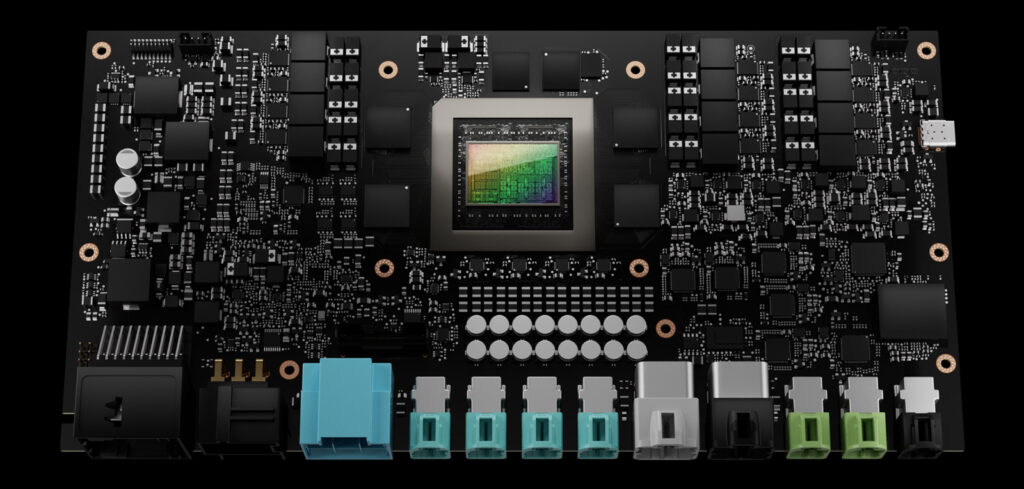

At its autumn keynote, Nvidia released details of Drive Thor, the company's next-generation centralized computer, which it says will make autonomous vehicles safer and more secure.

Nvidia's Drive Thor delivers up to 2,000 teraflops of performance and can consolidate intelligent functions such as automated and assisted driving, parking, and driver and occupant monitoring into a single architecture, improving efficiency and lowering overall system cost.

The superchip builds on artificial intelligence capabilities introduced in the Nvidia Hopper Multi-Instance GPU (MIG) architecture, along with the Nvidia Grace CPU and Nvidia Ada Lovelace GPU. MIG support for graphics and compute lets in-vehicle infotainment (IVI) and advanced driver assistance systems run in isolated domains, so time-critical processes can run concurrently without interruption.

“Advances in accelerated computing and AI are moving at lightspeed,” said Jensen Huang, founder and CEO, Nvidia. “Drive Thor is the superhero of centralized compute, with lightning-fast performance to deliver continuously upgradable, safe and secure software-defined supercomputers on wheels.”

Geely-owned EV maker Zeekr has selected Drive Thor as the brain of its future lineup of intelligent electric vehicles, with the start of production expected in early 2025.

“Zeekr users demand a luxury experience that includes the latest technology and safety features,” explained An Conghui, CEO, Zeekr. “Nvidia Drive Thor will support our mission of providing cutting-edge technology that fulfills the needs of our customers and ensures Zeekr remains at the forefront of tomorrow’s innovations.”

The superchip supports multi-domain computing, isolating the functions for automated driving and IVI from one another. Rather than relying on multiple electronic control units (ECUs), manufacturers can use Drive Thor to consolidate those functions on a single system-on-a-chip (SoC).

Nvidia says Drive Thor is the first AV platform to incorporate an inference transformer engine, a new component of the Tensor Cores within Nvidia GPUs. The engine can accelerate the inference performance of transformer deep neural networks by up to nine times, which is crucial for the AI workloads that self-driving vehicles require.

Drive Thor also supports 8-bit floating-point (FP8) precision. Neural-network accuracy is often lost when converting 32-bit floating-point data to 8-bit integer format; according to Nvidia, Drive Thor's 2,000 teraflops of FP8 precision allows the move to 8-bit without sacrificing accuracy.

Drive Thor can run multiple operating systems and uses NVLink-C2C chip interconnect technology, which can share, schedule and distribute work across the link with minimal overhead. This in turn enables OEMs to build software-defined vehicles that can receive over-the-air software updates safely and securely.
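The FP8-versus-INT8 accuracy claim comes down to dynamic range. INT8 spreads its 255 levels evenly across a range, so small values sitting next to a large one can round to zero, whereas FP8 spaces its values roughly logarithmically. The Python sketch below is a simplified illustration, not Nvidia's implementation: it assumes the E4M3 variant of FP8 (4 exponent bits, 3 mantissa bits), simple per-tensor scaling and made-up sample values, and every function name in it is hypothetical.

```python
import bisect


def e4m3_magnitudes():
    """All non-negative finite values of the FP8 E4M3 format (assumed variant).

    E4M3: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits.
    There are no infinities; exponent 15 with mantissa 7 encodes NaN,
    so the largest finite value is 1.75 * 2**8 = 448.
    """
    vals = []
    for e in range(16):
        for m in range(8):
            if e == 15 and m == 7:
                continue  # NaN bit pattern, not a number
            if e == 0:
                vals.append((m / 8) * 2.0 ** -6)  # subnormal values
            else:
                vals.append((1 + m / 8) * 2.0 ** (e - 7))  # normal values
    return sorted(vals)


E4M3 = e4m3_magnitudes()
E4M3_MAX = E4M3[-1]  # 448.0


def to_e4m3(x):
    """Round x to the nearest E4M3 value, saturating at +/-448.

    Ties go to the smaller magnitude, a simplification of the
    round-to-nearest-even rule used by real hardware.
    """
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    i = bisect.bisect_left(E4M3, a)
    if i == 0:
        return sign * E4M3[0]  # only reached when a == 0
    lo, hi = E4M3[i - 1], E4M3[i]
    return sign * (lo if a - lo <= hi - a else hi)


def quantize_fp8(xs):
    """Hypothetical per-tensor FP8: map max |x| to 448, round, scale back."""
    scale = E4M3_MAX / max(abs(x) for x in xs)
    return [to_e4m3(x * scale) / scale for x in xs]


def quantize_int8(xs):
    """Symmetric per-tensor INT8: map max |x| to 127, round to integers, scale back."""
    scale = max(abs(x) for x in xs) / 127
    return [max(-127, min(127, round(x / scale))) * scale for x in xs]


def rel_err(x, q):
    """Relative error of the quantized value q against the original x."""
    return abs(x - q) / abs(x)


if __name__ == "__main__":
    # Illustrative activations spanning five orders of magnitude.
    xs = [0.001, 0.01, 0.1, 1.0, 10.0, 100.0]
    for x, f, i in zip(xs, quantize_fp8(xs), quantize_int8(xs)):
        print(f"{x:>8}  fp8 err {rel_err(x, f):6.1%}  int8 err {rel_err(x, i):6.1%}")
```

On these sample values the INT8 path flushes 0.001, 0.01 and 0.1 to zero (100% error), while the FP8 path keeps every value within about 13% of the original. Production quantization schemes typically add calibration and finer-grained scaling, so this shows only the dynamic-range effect, not the end-to-end accuracy Nvidia cites.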

“The shift to software-defined vehicles with centralized electronic architectures is accelerating, driving a need for more powerful and more energy-efficient compute platforms,” said Sam Abuelsamid, principal research analyst, Guidehouse Insights. “The virtualization, high-speed data transfer and massive processing performance of Nvidia Drive Thor can enable safer vehicles, better user experiences and potential new revenue streams.”