According to the US Combat Capabilities Development Command (DEVCOM) Army Research Laboratory, researchers have developed a novel data set, RELLIS-3D, that provides the resources needed to develop more advanced algorithms and pursue new research directions. The researchers hope these will improve autonomous navigation in off-road environments.

DEVCOM researchers noted that existing autonomy data sets either represent urban environments or lack multimodal off-road data. Moreover, autonomous navigation systems that rely solely on lidar lack the higher-level semantic understanding of the environment that could make path planning and navigation more efficient.

Dr Maggie Wigness from the Army Research Laboratory commented, “Urban environments provide many structural cues, such as lane markings, crosswalks, traffic signals, roadway signs and bike lanes, which can be used to make navigation decisions, and these decisions often need to be in alignment with the rules of the road. These structured markings are designed to cue the navigation system to look for something such as a pedestrian in a crosswalk, or to adhere to a specification such as stopping at a red light.”

Off-road data sets need to contain non-asphalt surfaces, which present completely different traversability characteristics from roadways, added researcher Phil Osteen. For example, a vehicle might have to decide between navigating through grass, sand or mud, any of which could present challenges for autonomous maneuver. Even tall and short grass differ greatly in traversability, because tall grass can hide obstacles, holes or other hazards.

Additionally, while roads are not universally flat, they are for the most part relatively smooth and at least locally flat. Off-road driving can involve uneven hills and sudden drops, along with more potentially dangerous conditions (a small nearby cliff, loose rocks, etc) that could lead a navigation planner to make unsafe decisions.

“These sudden and constant changes in terrain require an off-road navigation system to constantly re-evaluate its planning and control parameters,” Wigness added. “This is essential to ensure safe, reliable and consistent navigation.”

According to Osteen, RELLIS-3D presents a new opportunity for off-road navigation research, and the data set can provide benefits in at least three ways. First, he said, it provides data that can be used to evaluate navigation algorithms, including simultaneous localization and mapping (SLAM), under challenging off-road conditions such as climbing and then descending a large hill.

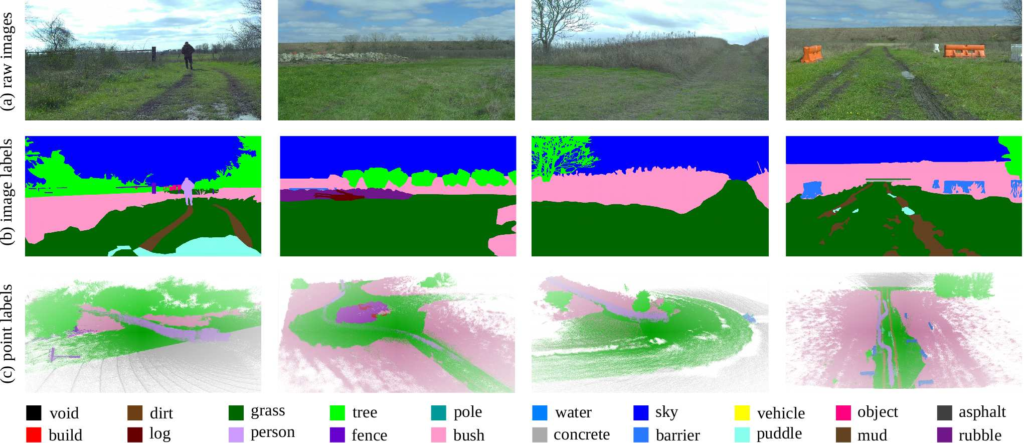

Second, it provides annotated lidar and camera data that can be used to train machine learning networks to better understand the terrain conditions in front of a robot, which can facilitate improved path planning based on semantic information.

Finally, Osteen noted, the combination of annotated data from active lidar and passive camera sensors may lead to algorithms that are trained with both sensor types but ultimately deployed in passive-only (camera-only) missions.

Wigness stated that the laboratory has spent the last couple of years developing data set resources for unstructured environments for the research community, and RELLIS-3D is the next step.

For example, the Robot Unstructured Ground Driving (RUGD) data set was publicly released in 2019. RUGD provides annotations for imagery only, but it was designed to give the broader academic and industry research community the resources needed to start thinking about perception for autonomous navigation in off-road and unstructured environments.

RELLIS-3D takes the next step by publicly providing data from an entire sensor suite for off-road navigation. This will enable researchers to leverage multimodal data for algorithm development, and the data set can be used to evaluate mapping, planning and control in addition to perception components. Moving forward, the researchers plan to develop and test new systems that learn from multiple distinct data sets simultaneously. They also plan to use the synchronized multimodal data to advance several lines of their research: costmap generation for navigation; algorithms that can rapidly retrain and improve performance in never-before-seen environments; and fusion of camera and lidar data to leverage the strengths of each modality.