R&D organization Southwest Research Institute (SwRI) has developed off-road autonomous driving tools tailored for military, space and agricultural applications. The system, called Vision for Off-Road Autonomy (VORA), relies on stereo cameras and advanced algorithms, eliminating the need for lidar and other active sensors.

“We reflected on the toughest machine vision challenges and then focused on achieving dense, robust modeling for off-road navigation,” said Abe Garza, a research engineer in SwRI’s intelligent systems division.

Traditional lidar systems emit detectable light, posing a risk in military scenarios. Similarly, radar emits waves that can be detected, and GPS signals can be jammed or blocked in certain terrains. VORA aims to address these issues by offering a passive sensing alternative that enhances stealth and reliability.

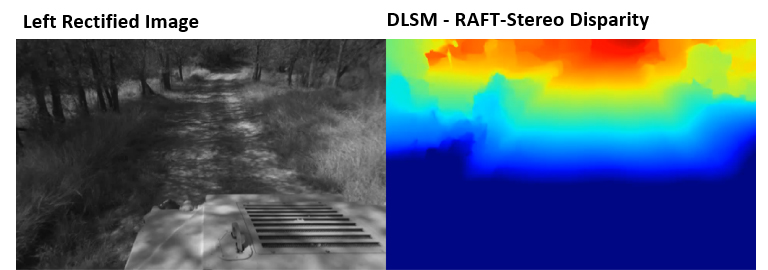

To achieve high precision in off-road environments, SwRI developed new software that uses stereo camera data for tasks traditionally reliant on lidar, including localization, perception, mapping and world modeling. The software includes a deep learning stereo matcher (DLSM), which uses a recurrent neural network to generate accurate disparity maps. A disparity map records, for each pixel, the horizontal offset between the left and right stereo images. Nearby objects shift more than distant ones, so the map encodes depth.
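As a rough illustration of why disparity maps matter for navigation, the sketch below converts a disparity map to metric depth using the standard rectified-stereo relation, depth = focal length × baseline / disparity. It is not SwRI's DLSM code; the function name `disparity_to_depth` and the focal length and baseline values are hypothetical and chosen only for the example.

```python
import numpy as np

def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (meters).

    Assumes a rectified stereo pair with focal length `focal_px` in pixels
    and camera separation `baseline_m` in meters. Pixels with zero or
    negative disparity (no valid match) are assigned infinite depth.
    """
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical camera: 800 px focal length, 0.25 m baseline.
example_disparity = [[10.0, 0.0],
                     [20.0, 40.0]]
example_depth = disparity_to_depth(example_disparity, focal_px=800.0, baseline_m=0.25)
```

Because depth is inversely proportional to disparity, small matching errors on distant objects produce large depth errors, which is one reason accurate disparity estimation is important.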

For simultaneous localization and mapping, SwRI engineered a factor graph algorithm that combines sparse data from various sources, such as stereo image features, landmarks, IMU readings and wheel encoders. A factor graph is a probabilistic model that links unknown variables, such as vehicle poses and landmark positions, to the measurements that constrain them, so the system can estimate all of them jointly.
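To make the factor graph idea concrete, here is a minimal sketch, assuming a one-dimensional vehicle and purely linear measurements. Each factor ties one or two pose variables to a measurement with an uncertainty, and the best estimate is the weighted least-squares solution over all factors. This is a toy stand-in, not SwRI's algorithm; the function `solve_linear_factor_graph` and all measurement values are hypothetical.

```python
import numpy as np

def solve_linear_factor_graph(num_vars, factors):
    """Solve a linear factor graph by weighted least squares.

    Each factor is a tuple (coeffs, measurement, sigma), meaning
    sum(coeffs[i] * x[i]) should equal `measurement` with standard
    deviation `sigma`. Rows are scaled by 1 / sigma so that more
    certain measurements pull harder on the solution.
    """
    A = np.zeros((len(factors), num_vars))
    b = np.zeros(len(factors))
    for row, (coeffs, measurement, sigma) in enumerate(factors):
        for index, coefficient in coeffs.items():
            A[row, index] = coefficient / sigma
        b[row] = measurement / sigma
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution

# Three poses x0, x1, x2 along a line (all values are made up).
factors = [
    ({0: 1.0}, 0.0, 0.01),          # prior: the vehicle starts near 0 m
    ({0: -1.0, 1: 1.0}, 1.0, 0.1),  # wheel encoder: x1 - x0 is about 1 m
    ({1: -1.0, 2: 1.0}, 1.0, 0.1),  # wheel encoder: x2 - x1 is about 1 m
    ({2: 1.0}, 2.3, 0.1),           # landmark sighting: x2 is about 2.3 m
]
poses = solve_linear_factor_graph(3, factors)
```

The odometry alone would place the last pose at 2 m, while the landmark sighting says 2.3 m. The solver spreads that disagreement across the equally trusted factors rather than believing any single sensor outright. Real systems handle 3D poses and nonlinear measurements, typically by repeating a linearized version of this step.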

Meera Day Towler, an SwRI assistant program manager who led the project, added, “We apply our autonomy research to military and commercial vehicles, agriculture applications and so much more.

“We are excited to show our clients a plug-and-play stereo camera solution integrated into an industry-leading autonomy stack.”

SwRI plans to integrate VORA technology into other autonomy systems and test it on an off-road course at SwRI’s San Antonio campus.