Exhibitors on Day 2 at ADAS & Autonomous Vehicle Technology Expo in Stuttgart are continuing to impress show visitors with their latest technologies to enable and accelerate end-to-end autonomous and ADAS applications, including testing tools, simulation, software, sensing and AI. Highlights include aiMotive, AVL, Elektrobit, Klas, Marelli Automotive Lighting and XenomatiX, Tallysman and Velodyne.

The show is taking place now at Messe Stuttgart, Germany, and finishes tomorrow, June 23, 2022. It’s not too late to attend! You can still register for a free entry pass.

Automated driving full software stack with reusable, model-space-based perception

aiMotive is showcasing the latest generation of its automated driving full software stack, aiDrive 3.0. It features in-house, model-space-based perception, which the company describes as the foundation for higher levels of automation, although its uses go beyond that. According to aiMotive, it is a system for the data-driven evolution of both direct model-space-based perception and multi-sensor fusion, supported by a fully automated annotation pipeline.

The company is showing a front-camera ADAS application of aiDrive 3.0 running on Texas Instruments’ TDA4 processor. All ADAS/AD features require a 3D representation of the vehicle’s surroundings (the model space) for decision making and path planning. Model-space-based perception meets this basic requirement by building a 360° 3D model space directly from fused data from multiple sensors of different modalities, which the company says gives the overall ADAS/AD feature stack a clearer view of its environment. “This represents a fully data-driven evolution, including multi-sensor fusion, which reduces complexity and computing resource needs,” said Gábor Pongrácz, aiDrive senior vice president, live at the expo.
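
To illustrate what a shared model space involves, the sketch below shows only its most basic ingredient: transforming detections from several sensors, each with its own mounting pose, into one vehicle-centred frame. It is not aiMotive’s method; the `SensorMount` type, the sensor names, the mounting poses and the yaw-only rotation are hypothetical simplifications chosen for clarity.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class SensorMount:
    """Hypothetical extrinsic calibration: where a sensor sits on the vehicle."""
    name: str
    x: float        # metres forward of the vehicle origin
    y: float        # metres to the left of the vehicle origin
    z: float        # metres above the vehicle origin
    yaw_deg: float  # rotation of the sensor about the vertical axis only (simplification)


def to_vehicle_frame(mount: SensorMount, point: Point) -> Point:
    """Rotate a sensor-frame point about z by the mount's yaw, then translate by its position."""
    px, py, pz = point
    yaw = math.radians(mount.yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * px - s * py + mount.x,
            s * px + c * py + mount.y,
            pz + mount.z)


def fuse(detections: List[Tuple[SensorMount, List[Point]]]) -> List[Tuple[str, Point]]:
    """Register every sensor's detections into one shared vehicle frame, tagged by source."""
    registered = []
    for mount, points in detections:
        for p in points:
            registered.append((mount.name, to_vehicle_frame(mount, p)))
    return registered


if __name__ == "__main__":
    front_camera = SensorMount("front_camera", 2.0, 0.0, 1.4, 0.0)
    left_radar = SensorMount("left_corner_radar", 3.5, 0.9, 0.5, 90.0)
    for source, pt in fuse([(front_camera, [(10.0, 0.0, -1.4)]),
                            (left_radar, [(5.0, 0.0, 0.0)])]):
        print(source, tuple(round(v, 3) for v in pt))
```

A real multi-sensor pipeline would go further, associating and fusing these registered detections over time; the rigid transform is simply the common step that makes data from different sensors comparable in one space.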

aiMotive says it provides more than just software, preferring to describe its offering as a reusable and efficient data and simulation pipeline whose primary goal is to shorten automotive players’ time-to-market. aiSim, its end-to-end validation suite, accelerates the evolution of aiDrive’s features, while aiDrive ensures aiSim can validate complex automated driving solutions. In addition, aiData, the company’s proprietary data pipeline, provides the foundation for its other solutions and reduces processing complexity through a high degree of automation while maintaining automotive quality.

Validating the safety of any system requires processing vast amounts of validation data. According to aiMotive, its fully automated data pipeline, paired with its perception solution, reduces the overall effort and cost of validation data management, supports continuous improvement and a fast time-to-market, and keeps manual annotation costs as low as possible.

AVL showcases its ADAS/AD portfolio, validation toolchain and proving ground

AVL is exhibiting its complete ADAS/AD portfolio, from ADAS/AD engineering services to ground truth data generation, data management and analytics, scenario-based test preparation and virtual testing solutions with its steering force emulator. The company’s verification and validation toolchain is modular and enables test preparation, execution and evaluation across multiple test environments and strategies, facilitating simulation and virtual homologation.

The company is also presenting its proving ground, which gives customers additional flexibility for ADAS/AD testing. The facility combines conventional dynamic test elements with advanced modules such as a dedicated ADAS surface with local ADAS test services and a forward-looking 15ha smart city zone.

AVL describes itself as a leading system provider offering engineering and testing solutions from a single source. Customers benefit from its seamless scenario-based testing framework, which spans simulation, vehicle-in-the-loop and real-world testing. The framework provides AI-based methods for scenario extraction and tools for scenario creation and management; methods for generating test cases and optimizing test coverage, such as ontologies and real road statistics; scalable big data solutions with built-in AI-based algorithms for data-driven development; and up-to-date scenario content packages for regulatory standards, including NCAP, EU-GSR and UNECE ALKS.
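
Scenario-based test case generation often starts from a scenario template with parameter ranges, which is then expanded into concrete test cases. The minimal sketch below uses a simple full-factorial expansion with `itertools.product`; the cut-in scenario, its parameter names and its values are hypothetical. It is not AVL’s toolchain: tools that optimize coverage, for example with ontologies and real road statistics as AVL describes, would typically prune or weight such a grid rather than run every combination.

```python
from itertools import product
from typing import Dict, Iterator, List

# Hypothetical scenario template: a cut-in manoeuvre described by parameter value lists.
LOGICAL_CUT_IN: Dict[str, List] = {
    "ego_speed_kph": [60, 90, 120],
    "cut_in_gap_m": [10, 20, 40],
    "weather": ["dry", "rain"],
}


def concrete_scenarios(template: Dict[str, List]) -> Iterator[Dict]:
    """Yield one concrete test case per combination of the template's parameter values."""
    names = list(template)
    for values in product(*(template[n] for n in names)):
        yield dict(zip(names, values))


if __name__ == "__main__":
    cases = list(concrete_scenarios(LOGICAL_CUT_IN))
    print(len(cases), "test cases; first:", cases[0])
```

Here three speeds, three gaps and two weather conditions yield 3 × 3 × 2 = 18 test cases. The count grows multiplicatively with every added parameter, which is why coverage optimization matters.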

AVL says its strong engagement in regulatory and standardization bodies helps accelerate the safe deployment of ADAS/AD systems.

“At AVL, we have been working for several years on a broad portfolio covering tools for scenario-based virtual testing of ADAS and AD systems. Major cornerstones in this toolchain are the test planning tools and supporting various testing execution methods, especially vehicle-in-the-loop on testbeds. Last but not least, we are showing our ground-truth measurement system and sensor benchmarking system for open-road testing. In the vehicle-in-the-loop family, we are proud to show our new Steering Force Emulator that allows ADAS vehicles on the testbed to experience steering forces in a simulated environment,” explained Roland Lang, AVL’s program manager for ADAS and AD business development, live at the show.

Simplified ADAS testing with model-based, virtual development

Elektrobit is in Stuttgart to share how model-based, virtualized development can simplify the ADAS testing process. The company aims to help customers develop profitable embedded software that validates test-drive scenes while avoiding expensive simulation.

The company claims its solutions are unique because they reduce bottlenecks in the testing and validation process: coding bugs can be detected and fixed early in ECU development.

Elektrobit is also using the expo as a platform to share more about its EB Assist ADTF partner program, an active collaboration of companies specializing in sensors, virtual test drives, automotive bus interfaces, datalogging and more. Customers benefit from ready-made software and sensor interfaces and filters that are closely integrated with EB Assist ADTF.

Elektrobit welcomes companies who want to contribute to the development of ADAS and automated driving systems through EB Assist ADTF.

Open-architecture solution for rapid prototyping and AD development

Klas has announced at the expo that its RAVEN (Ruggedized Autonomous Vehicle Network) open-architecture solution for rapid prototyping and development of ADS and ADAS now supports logging from GMSL2 cameras.

The company is showcasing the capabilities of RAVEN’s TRX D8 datalogger, which offers write speeds of up to 7Gb per second and a storage capacity of 240TB. Klas claims this makes it the fastest datalogger of its compact size on the market.
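
For a rough sense of scale, the quoted figures imply how long the logger could record at its peak rate before filling up. The sketch below assumes decimal units (1TB = 10^12 bytes), that "7Gb" means gigabits rather than gigabytes, and a constant sustained write rate; none of these assumptions is confirmed by the figures above, and `fill_time_hours` is a hypothetical helper.

```python
def fill_time_hours(capacity_tb: float, write_rate_gbit_s: float) -> float:
    """Hours to fill `capacity_tb` terabytes at a sustained `write_rate_gbit_s` gigabits/s.

    Assumes decimal units (1 TB = 10**12 bytes, 1 Gb = 10**9 bits) and a constant rate.
    """
    capacity_bits = capacity_tb * 1e12 * 8          # bytes -> bits
    seconds = capacity_bits / (write_rate_gbit_s * 1e9)
    return seconds / 3600


if __name__ == "__main__":
    print(f"{fill_time_hours(240, 7):.1f} h at 7 gigabits/s")
    print(f"{fill_time_hours(240, 7 * 8):.1f} h at 7 gigabytes/s")
```

Under these assumptions, 240TB at 7 gigabits per second takes about 76 hours to fill; if the rate were 7 gigabytes per second, the time would be eight times shorter, at roughly 9.5 hours.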

All systems are integrated to accelerate development. The modular solution eliminates the need to build cumbersome test rigs and source equipment from multiple vendors, saving time and cost. Developers can connect ruggedized components back-to-back, eradicating complex cabling and potential failure points. Its modules include 10GbE interfaces that are interconnected by a high-performance network switch with a 121Gbps backplane, removing network bottlenecks.

RAVEN’s open and flexible architecture enables multiple AV developers to easily create, build and run autonomous driving stacks in-vehicle, saving costs and test vehicle setup times.

Its high-performance compute supports Nvidia GPUs, allowing developers to train vision detection algorithms live during test drives, thereby reducing engineering cycles and time. SerDes logging captures GMSL2 camera data with minimal intrusion on the data transmission, ensuring vehicles can continue to operate safely. Native automotive Ethernet support makes it easier to connect to the next generation of sensors and ECUs, maximizing return on investment through reusability in future use cases.

RAVEN supports a virtualization layer, allowing multiple test stacks to be run simultaneously. No additional hardware is needed while new systems are being designed, eliminating weeks of procurement effort and downtime waiting on vehicle hardware delivery. It also supports a 40Gbps networking trunk, maximizing throughput from the development platform to external networks, resulting in faster access to real-world data.

“The ability to intelligently harvest data at the automotive edge is key to delivering safe, driverless experiences,” explained Frank Murray, CTO of Klas. “With RAVEN, not only can driving stacks be developed faster, but by leveraging its compute, real-world evaluations can be done in a closed system, ensuring test vehicles and other road users remain safe as the software evolves.”

Full solid-state lidar modules boast next-level sensing

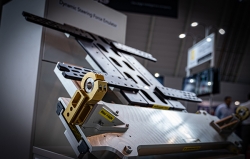

Marelli Automotive Lighting and XenomatiX have combined their expertise in optics and electronics to create a new line-up of lidar modules for autonomous vehicles, which they are presenting at the expo.

Marelli is a Tier 1 lighting supplier. Its lidar has been designed primarily for the front or four corners of a car, as a standalone system or integrated into a smart corner or smart grille to achieve short-, mid- and long-range distance measurements.

Marelli aims for a stylish design while offering greater integration. According to the company, this supports weight reduction and shorter sensor assembly and calibration times on the assembly line, allows cleaning and defrosting solutions to be embedded seamlessly and protects the sensors from shocks and impacts.

According to the company, the modules are easier to integrate because they take up less space than larger lidar that is not fully solid-state, such as MEMS-based or mechanical sensors. Full solid-state technology also offers greater safety and total immunity to shock, a more robust design with no mechanical failures, more relaxed assembly requirements, and no blur or ghosting thanks to its global-shutter approach.

Because it uses a CMOS-based receiver, Marelli’s lidar can also capture a video stream alongside its rich point cloud output. As both come from the same receiver, there is no point-of-view difference between them in ground-truth data acquisition. The design also enables faster calibration and self-recalibration.

Furthermore, building on the same base technologies is key to improving performance over time and reducing development costs. Both VCSEL emitters and CMOS receivers will continue to become more efficient, enabling new generations of more effective lidar modules.

Marelli is showing expo visitors how the lidar’s exterior design reflects the company’s day-to-day experience in outer lens production, including stone impact protection and mechanical assembly for large production volumes. Meanwhile, its interior demonstrates the company’s skills in optics, thermal management, automotive-grade electronics and software.

Advanced triple-band antenna and smart GNSS signal splitter provide RTK and PPP accuracy

Tallysman is at ADAS & Autonomous Vehicle Technology Expo in Stuttgart to demonstrate its antennas for RTK (real-time kinematics) and PPP (precise point positioning) accuracy. They feature tight phase center variation across frequency bands (L1/L2/L5, etc.), strong multipath mitigation, wide bandwidth to support all constellations and their spread spectrum signals, and deep filtering to mitigate near-band signals.

According to Tallysman, the placement of a GNSS antenna in an automotive application is critical. Surface currents on a metallic roof can interfere with the weak GNSS signals, poor implementation can increase reflections from below and above the antenna, and proximity to other radio frequency transmitters or digital devices and cables can degrade GNSS performance.

Tallysman’s ADAS-class GNSS system (receiver, antenna and corrections) can provide positions accurate to about 0.1m. This positioning information can be fed to the sensor fusion algorithm to support lane keeping and situational awareness, and to provide navigation information.

Tallysman’s automotive-grade TWA928LXF antenna supports all GNSS constellations (GPS/QZSS, GLONASS, Galileo, BeiDou and NavIC). The TWA928LXF is a triple-band antenna and also supports L-band corrections.

Tallysman also manufactures a full-band machine control GNSS antenna that supports all the frequencies and signals listed above and adds QZSS (L6), Galileo (E6) and BeiDou (B3) support. Galileo and QZSS broadcast GNSS corrections in the L6/E6 frequency band (1,257-1,300MHz). Galileo’s High Accuracy Service (HAS), which provides corrections for GPS and Galileo, will offer global coverage with accuracy better than 0.2m (95% confidence after convergence). The regional QZSS constellation will also broadcast Centimeter Level Augmentation Service (CLAS) corrections in the L6 band.

Furthermore, when a vehicle is out of cellular coverage, the worldwide Iridium Low Earth Orbit (LEO) communication constellation can be used instead. Commercial off-the-shelf modems and phones are available from Tallysman. Iridium antennas are offered in active (receive-only) and passive (transmit and receive) models and must be certified by Iridium.

Solid-state lidar for accurate near-field perception and more

Velodyne Lidar EMEA is presenting its latest hardware and software solutions at the expo. Its Velarray M1600 solid-state sensor has been designed for autonomous applications in public, commercial and industrial environments and is optimized for accurate near-field detection for autonomous platforms.

The sensor offers near-field perception for safe navigation in a variety of environmental conditions, with a detection range of 0.1-30m (the maximum range is specified for targets with 10% reflectivity) and a wide 32° vertical field of view that supports smooth operation, even in areas with a lot of movement.
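
To put the 32° vertical field of view in context, simple trigonometry gives the height of the vertical slice the sensor covers at a given distance: 2 × distance × tan(FOV/2). The sketch assumes a flat target perpendicular to the sensor’s boresight and ignores mounting height and tilt; `vertical_coverage_m` is a hypothetical helper, not part of Velodyne’s software.

```python
import math


def vertical_coverage_m(distance_m: float, vfov_deg: float = 32.0) -> float:
    """Height (m) of the vertical slice covered at `distance_m` for a given vertical FOV.

    Assumes a flat target perpendicular to the boresight; ignores mounting height and tilt.
    """
    return 2 * distance_m * math.tan(math.radians(vfov_deg / 2))


if __name__ == "__main__":
    for d in (1.0, 10.0, 30.0):
        print(f"{d:>5.1f} m -> {vertical_coverage_m(d):.2f} m of vertical coverage")
```

At the 30m maximum range this works out to roughly 17.2m of vertical coverage, and about 0.57m at 1m.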

Velodyne is also showcasing its Vella software and Vella Development Kit (VDK). VDK enables customers to connect the lidar sensors to a standard library of functions, which the company says opens up a wide range of opportunities to develop solutions for constantly evolving applications and bring them to market.

Velodyne says the various automotive players it has been in discussions with all agree that lidar technology is crucial in the sensor mix for future autonomy. Indeed, the company’s sensors have long been an integral part of many autonomous shuttle programs worldwide.

The company claims that lidar technology has many advantages over other technologies, and it also highlights the role of lidar simulation. Simulations accelerate system development and deployment by providing a virtual environment for testing automated driving capabilities in a range of roadway, weather and lighting conditions. They also support testing of extreme scenarios, including corner and edge cases, and potential hazards such as emergency braking and obstacle avoidance.

At the expo, Velodyne Lidar is also revealing more about its Intelligent Infrastructure Solution (IIS), which combines AI and lidar. The company describes this combined hardware and software technology as a breakthrough designed to solve some of the most challenging and pervasive infrastructure problems.

By combining the company’s lidar sensors with Bluecity’s AI software, users can monitor traffic networks and public spaces and generate real-time data analytics and predictions. According to Velodyne, this improves traffic and crowd flow efficiency, advances sustainability and helps protect vulnerable road users in all weather and lighting conditions.