At ADAS & Autonomous Vehicle Technology Expo in Stuttgart, exhibitors are currently showcasing the latest technologies to enable and accelerate end-to-end autonomous and ADAS applications, including testing tools, simulation, software, sensing and AI. There is an incredible amount of innovation everywhere you look and the first day has already seen new launches and cutting-edge technology across the floor, including the latest from Automotive Artificial Intelligence (AAI), AB Dynamics, AIMMO, Applied Intuition, Cognata, OTSL and Siemens.

The show is taking place at Messe Stuttgart, Germany, on June 21, 22 and 23, 2022, and is completely free to attend. If you haven’t already done so, register now for your free entry pass!

ReplicaR of the World simulation platform

On the opening day of ADAS & Autonomous Vehicle Technology Expo in Stuttgart, Automotive Artificial Intelligence (AAI) is giving live demonstrations of the knowledge- and data-driven approaches behind its simulation platform, ReplicaR of the World, which combines vehicle data with synthetic data. Built from real-world data consisting of virtual maps, 3D scenes and traffic around the test vehicle, it provides fast, safe and cost-efficient solutions for near-to-real-life MIL, SIL, HIL and VIL testing.

Using multiple use cases for OEMs, Tier 1s and companies developing autonomous driving stacks or hardware, AAI representatives are using the expo to show that real-world data gives a good base for testing but is limited to recorded scenarios. Using machine learning methods, AAI’s solutions greatly increase the database of possible scenarios in a relatively short time.

To meet the current UN Regulation No. 157 on automated lane keeping systems (ALKS), automotive companies now need to combine real-world data with the simulation world. AAI has therefore automatically generated a ‘quasi ground truth’ from petabytes of data, while leaving open the option of creating a ground truth.

The company’s unique platform is modular, highly integrable and has open interfaces, giving customers flexibility to build their simulation ecosystem. They can also define their own operational design domains (ODDs) and make products ready for approval from authorities. Test results are used to optimize and re-test algorithms quickly; resulting scenarios extend test case coverage within a very short time, making scenario-based testing in simulation as well as real-world test drives much more efficient.

This complete testing of systems enables much more controlled, structured and secure analysis possibilities. Real-world tests are reduced, saving customers time, personnel and hardware resources.

Highly immersive, compact aVDS-S from AB Dynamics accelerates ADAS development

AB Dynamics presented its compact aVDS static driving simulator (aVDS-S) on Day 1 of the show. It features an active brake pedal and steering force feedback system for enhanced immersion, meaning it can be used for more advanced applications than traditional static simulators, such as regenerative braking control strategies and power steering calibration.

It is different from other driver-in-the-loop simulators as it includes in-house developed and manufactured haptic feedback devices, designed to minimize magnetic cogging torque rather than using off-the-shelf solutions. More compact than a dynamic alternative, the aVDS-S can be installed in a typical office environment without requiring a purpose-built room.

Engineers can significantly accelerate the development of ADAS technologies by front-loading the process and adding a driver-in-the-loop early on, advancing the design before real-world tests are conducted. This reduces the number of prototypes required and the time spent in the development phase, making it more cost-effective.

Virtual environments from leading software supplier rFpro have enhanced not only driver immersion but also the development of ADAS and AV technologies through physically modeled, accurate visuals.

Euro NCAP, government test authorities and OEMs are moving toward virtual validation through compact, cost-effective driver-in-the-loop simulators, as vehicles and the regulatory tests required for type approval are becoming increasingly complex and the number of vehicles requiring type approval is rising.

“Through the aVDS-S, organizations that do not yet have the absolute requirement, or associated budget, for a highly advanced dynamic driving simulator, such as our aVDS, can still realize many of the benefits of meaningful human involvement early in the vehicle development process,” explained Dr Adrian Simms, AB Dynamics’ business director of laboratory test systems.

At the adjoining Automotive Testing Expo, the company is also presenting its realistic and durable ADAS targets. Developed by DRI, part of the AB Dynamics Group, the DRI Soft Motorcycle 360 features speed-matched rotating wheels, meaning the dummy is reliably recognized as a motorcycle by cameras, radar and lidar.

Deep learning AI inspection to speed data curation and labeling

AIMMO is in Stuttgart to launch its Smart Labeling technology to the European market, claiming it can significantly reduce the time between data acquisition and availability of high-quality training data, enabling new scenarios, locations and edge cases to be trained faster.

The use of deep learning algorithms to support the labeling process reduces the time required to provide results back to the OEM. Areas where challenges are found during testing and validation can be overcome quickly, progressing the program at pace.

Repetitive work is lessened, and if deep learning is applied from the data collection stage, the entire autonomous driving data process can be shortened. David Marks, head of sales (Europe) at AIMMO, has revealed live at the expo that the company’s self-driving data collection vehicle is being developed in this direction.

He pointed out that many companies are focusing on labeling automation, which is important, but mainly because up-front decisions on which data to use as training data can have a huge impact on budgets and schedules.

“AIMMO’s deep learning based AI inspection can speed up the data curation process by up to 50% and data labeling by up to 80%,” emphasized Marks. “Repetitive and easy class processing is already yielding excellent results; the results for more complex class processing are increasing continually as the deep learning model evolves.”

He continued, “Any human-only-based approach carries the risk of error. To process the vast amounts of data required for autonomous driving, the workload is shared across multiple annotators; results are then quality checked by multiple inspectors. AI doesn’t suffer from these challenges, so Smart Labeling is more consistent. The human-in-the-loop finalizes the labeling process and, following inspection, the new ground truth can be used to further train the AI, increasing its reliability and accuracy.”

Doyle Chung, head of global strategy, agreed: “Too many smart people who should be coding algorithms are spending untold time generating training data to test and validate their work. AIMMO takes this pain away and provides a fast, data-rich and highly accurate source of structured data so AI solutions can take root.”

Synthetic sensor data platform provides high-fidelity training data in real time

On Day 1 in Stuttgart, Applied Intuition is presenting its synthetic sensor data platform, which reduces the complexity and cost of data collection and manual labeling. Its synthetic data sets enable ML engineers to immediately define and generate labeled data when they discover a shortcoming in their model, solving problems in days.

Training data is usually collected to address a specific issue identified in model analysis. As most real data is annotated manually, if the issue is due to a rare but important occurrence, it can cost dollars per frame for annotations such as semantic segmentation and take over a month for large batches of data. Human errors also degrade model performance.

Other annotations, such as optical flow for camera images, are created using another sensor modality such as lidar to obtain additional information when collecting the raw data. However, this approach is vulnerable to sensor calibration issues and is limited by the density of data produced by the additional sensor, which, in the case of lidar, is significantly less dense than a camera image.

With synthetic data sets, annotations are dense and error-free because they are generated programmatically with exact knowledge of the simulated world. Data sets with millions of examples of an edge case can be generated without spending years collecting examples. Additionally, randomization can be used to discover edge cases not yet observed in the real world, which can then be addressed before on-road failures occur.
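The idea of labels that are exact by construction can be illustrated with a toy sketch. This is not Applied Intuition's platform or API: the class list, frame size and the `generate_frame` function are hypothetical, and the "scene" is simply a set of randomly placed rectangles. Because the generator knows where it placed every object, the per-pixel class mask and the bounding boxes need no human annotation.

```python
import random
from dataclasses import dataclass

# Hypothetical class list for illustration; class ID 0 is background.
CLASSES = ["car", "pedestrian", "cyclist"]


@dataclass
class Box:
    label: str
    x: int
    y: int
    w: int
    h: int


def generate_frame(rng, width=64, height=48, max_objects=3):
    """Place random rectangles in an empty frame and derive labels from them."""
    mask = [[0] * width for _ in range(height)]
    boxes = []
    for _ in range(rng.randint(1, max_objects)):
        # Randomize class, size and position (a crude form of randomization).
        class_id = rng.randint(1, len(CLASSES))
        w, h = rng.randint(4, 16), rng.randint(4, 16)
        x, y = rng.randint(0, width - w), rng.randint(0, height - h)
        # Later objects overwrite earlier ones, like an occluding object.
        for row in range(y, y + h):
            for col in range(x, x + w):
                mask[row][col] = class_id
        boxes.append(Box(CLASSES[class_id - 1], x, y, w, h))
    return mask, boxes


# A seeded generator makes every frame reproducible.
mask, boxes = generate_frame(random.Random(0))
```

A real pipeline would render sensor-realistic images rather than rectangles, but the principle is the same: the annotation is read out of the scene description instead of being drawn by hand.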

“Production synthetic data set technologies enable autonomous vehicle perception and localization developers to generate, in real time, the specific labeled data that they need,” explained Chris Gundling, head of sensor simulation at Applied Intuition.

“Synthetic data can be generated at scale, without the need for human annotators, and can touch on conditions that are rare but critical to real vehicles on the road. The latest technology in rendering, GANs and sensor physics modeling has increased the fidelity of synthetic data to a point where significant gains in training and a minimal, manageable domain gap in inference are achieved,” Gundling concluded.

Cloud platform enables large-scale regression testing and more

On the first day of ADAS & Autonomous Vehicle Technology Expo in Stuttgart, Germany, Cognata is displaying a new cloud platform that can manage data from simulations and real-world driving, and provide full ADAS lifecycle management.

The platform enables large-scale regression testing, training of perception pipelines, scenario classification and failure analysis.

“Our simulation provides two main use cases: smart large-scale regression testing for validating ADAS functions, and synthetic data for training perception and control stacks,” explained Cognata’s CEO and founder, Danny Atsmon. “Cognata’s user base is actively using the platform to solve both use cases.”

The company is also in Stuttgart to show off its full range of simulation tools, which Atsmon claims provide best-in-class sensor rendering, diversified 3D scenes for ADAS, AV, off-highway and warehouse logistics applications, and the most advanced AI for traffic simulations. “Cognata has a unique database of tens of thousands of interesting scenarios and scenes combinations,” he concluded.

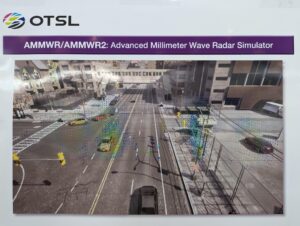

3D millimeter-wave radar simulator for autonomous driving

OTSL is in Stuttgart to unveil its new 3D real-time millimeter-wave radar simulator for autonomous driving, the AMMWR2 (Advanced Millimeter Wave Radar Simulator 2).

Since introducing AMMWR to the market in 2017 as the world’s first sensor simulator software for autonomous driving that enables dynamic real-time simulation, OTSL has continued to invest in its development. With the announcement of AMMWR2, the company is demonstrating the leaps in functionality and performance achieved through this development.

OTSL plans to market the AMMWR2 worldwide by the end of 2022 to automotive manufacturers, semiconductor manufacturers developing sensor devices, and system supply manufacturers developing, designing and producing vehicle sensors.

“With the global advance in application of autonomous driving, expectations are growing for advanced simulation technology capable of creating a virtual reproduction of all kinds of driving conditions, and verifying and validating safety and accuracy,” explained Shoji Hatano, CEO, OTSL and OTSL Germany. “However, the current autonomous driving vehicle has only Level 2 or Level 3 functions under the SAE International standards.”

Hatano continued, “To achieve fully autonomous driving via system monitoring, Level 5 of the standards, it is essential to include simulation of potential accidents caused by electronic device defect and sensor failures. AMMWR2, announced on June 17, is the only millimeter-wave radar sensor simulator that covers from the electronic device level, including semiconductors and sensors, to the autonomous driving (AD) and advanced driver assistance system (ADAS) simulation domain.”

Make sense of massive amounts of automated driving data with Simcenter Scaptor

Siemens is in Stuttgart to display its Simcenter Scaptor (sensor capture), which provides high-speed in-vehicle recording with a scalable and flexible hardware platform to capture, synchronize and store vast amounts of raw data under rugged test conditions.

“When testing against relevant scenarios, typically a lot of time is lost on finding the appropriate scenarios to test against,” explained Robbert Lohmann, business development director of AV at Siemens.

“Scaptor provides an integrated working toolchain from recording to data annotation and storage. The solution ensures that engineers can focus on actual testing instead of spending time on finding the right data.”

Data can be structured using advanced automatic annotation to boost efficiency and productivity. This gives the ability to find incorrect tags and data gaps to fill, and to retrieve the appropriate scenarios for tests at any time. It directly improves productivity because less time is spent searching for the right data and more time is spent using it to support algorithm development.
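How annotation tags make scenarios retrievable and reveal data gaps can be sketched in a few lines. This is a generic illustration, not the Simcenter Scaptor toolchain: the recording IDs, tag names and the functions `build_index`, `find_scenarios` and `find_gaps` are all hypothetical.

```python
from collections import defaultdict
from itertools import product


def build_index(recordings):
    """Map each tag to the set of recording IDs annotated with it."""
    index = defaultdict(set)
    for rec_id, tags in recordings.items():
        for tag in tags:
            index[tag].add(rec_id)
    return index


def find_scenarios(index, required_tags):
    """Return the recording IDs that carry every required tag."""
    sets = [index.get(tag, set()) for tag in required_tags]
    return set.intersection(*sets) if sets else set()


def find_gaps(index, weather_tags, event_tags):
    """List weather/event combinations for which no recording exists."""
    return [
        (weather, event)
        for weather, event in product(weather_tags, event_tags)
        if not find_scenarios(index, [weather, event])
    ]


# Hypothetical annotated recordings.
recordings = {
    "drive_001": {"rain", "cut_in"},
    "drive_002": {"clear", "cut_in"},
    "drive_003": {"rain", "pedestrian_crossing"},
}
index = build_index(recordings)

print(find_scenarios(index, ["rain", "cut_in"]))
print(find_gaps(index, ["rain", "clear"], ["cut_in", "pedestrian_crossing"]))
```

With this sample data, the first query returns only `drive_001`, and the gap search reports that no recording combines clear weather with a pedestrian crossing, which is the kind of missing coverage a test team would then go out and record.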

“Scaptor incorporates the leading b-plus technologies hardware, allowing it to bit-accurately capture, synchronize and store vast amounts of raw data under rugged test conditions,” continued Lohmann. “This is done for multiple data streams coming from radar, lidar, high-resolution cameras and automotive buses and networks. When and where no edge cases are encountered during the recording, the data is still valuable as the basis of Siemens’ mathematical approach to be able to identify unknown, unsafe scenarios.”

Scaptor also applies deep natural anonymization to captured videos and images to ensure they can still be used for the training of machine learning models. This technology retains relevant information such as facial expression or line-of-sight throughout the anonymization process, while ensuring no identity match is possible based on automatic recognition.

“Through Simcenter Scaptor we bring value to data, from recording to annotation, analysis and anonymization – combining the experience and leading solutions of expert parties,” added Robin van der Made, director of product management at Siemens, live on Day 1. Van der Made is also presenting on Day 3 at the Autonomous Vehicle Test & Development conference track.