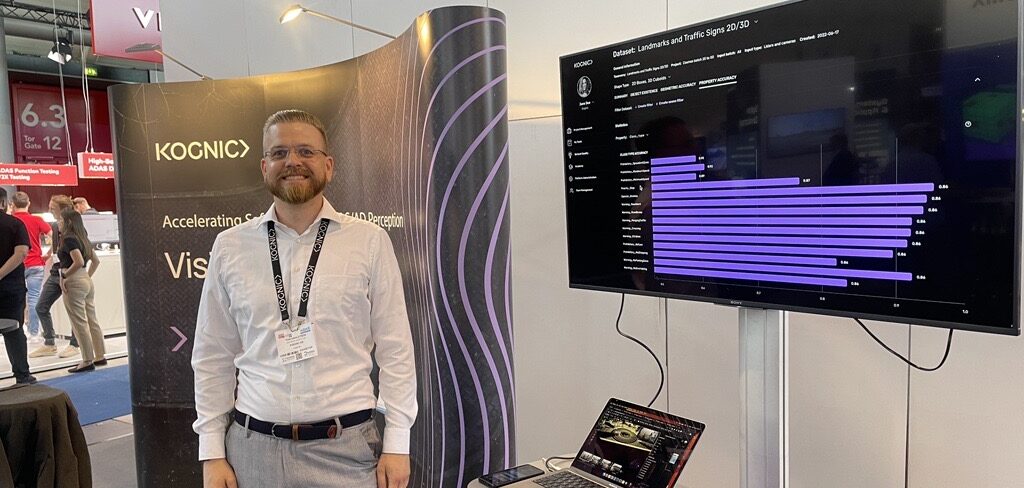

The first day of the ADAS & Autonomous Vehicle Technology Expo has seen strong interest in Kognic's multisensor data labeling capabilities, which allow data from multiple sensors to be annotated at the same time, saving time and reducing the cost of producing labeled data.

The new feature is built for data collection vehicles that come equipped with five or more lidars and even more cameras and radars. Every object the vehicle encounters needs to be represented in each individual sensor's data, with a consistent object ID across the whole data set. Repeating the annotation process for each image and point cloud is tedious and inefficient, yet automotive-grade automation solutions have not been available until now.

Motion- and timestamp-compensated multisensor labeling allows teams to annotate as many cameras and point cloud sensors as needed, relying on the spatial and temporal calibration between sensors that proper interpolation requires. The process runs automatically: a single manual annotation is enough to immediately produce additional, consistent labels across the other sensors.
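The article does not describe Kognic's implementation, so what follows is only a minimal Python sketch of the general idea behind motion- and timestamp-compensated transfer, under stated assumptions. A single object center annotated in one sensor's data is moved into the world frame using the ego pose at that sensor's timestamp. It is then shifted by an assumed constant object velocity and brought into a second sensor's frame using the ego pose at that sensor's own timestamp. All names (`Pose`, `ego_trajectory`, `transfer_annotation`, `project_pinhole`) are hypothetical, as are the yaw-only rotations, the linear pose interpolation and the x-forward camera convention. These are simplifications chosen for illustration.

```python
import bisect
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical rigid transform with yaw-only rotation: position plus heading in radians."""
    x: float
    y: float
    z: float
    yaw: float

    def apply(self, p):
        """Map a point from this pose's local frame into its parent frame."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (self.x + c * p[0] - s * p[1], self.y + s * p[0] + c * p[1], self.z + p[2])

    def inverse_apply(self, p):
        """Map a point from the parent frame into this pose's local frame."""
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        dx, dy, dz = p[0] - self.x, p[1] - self.y, p[2] - self.z
        return (c * dx + s * dy, -s * dx + c * dy, dz)


def ego_trajectory(samples):
    """Return t -> ego Pose in the world frame, linearly interpolating sorted (t, Pose) samples."""
    times = [t for t, _ in samples]

    def ego_at(t):
        # Pick the bracketing segment; clamping means times outside the range are extrapolated.
        i = min(max(bisect.bisect_right(times, t), 1), len(samples) - 1)
        (t0, p0), (t1, p1) = samples[i - 1], samples[i]
        a = (t - t0) / (t1 - t0)
        # Interpolate heading along the shorter arc.
        dyaw = math.atan2(math.sin(p1.yaw - p0.yaw), math.cos(p1.yaw - p0.yaw))
        return Pose(p0.x + a * (p1.x - p0.x), p0.y + a * (p1.y - p0.y),
                    p0.z + a * (p1.z - p0.z), p0.yaw + a * dyaw)

    return ego_at


def transfer_annotation(center, t_ref, ref_sensor, t_target, target_sensor, ego_at,
                        velocity=(0.0, 0.0, 0.0)):
    """Move one annotated object center from a reference sensor to a target sensor.

    ref_sensor / target_sensor are hypothetical extrinsics (sensor frame -> ego frame).
    velocity is an assumed constant world-frame object velocity in m/s.
    """
    # 1. Reference sensor -> ego -> world, using the ego pose at the reference timestamp.
    world = ego_at(t_ref).apply(ref_sensor.apply(center))
    # 2. Object motion between the two capture times (constant-velocity assumption).
    dt = t_target - t_ref
    world = tuple(w + v * dt for w, v in zip(world, velocity))
    # 3. World -> ego -> target sensor, using the ego pose at the target timestamp.
    return target_sensor.inverse_apply(ego_at(t_target).inverse_apply(world))


def project_pinhole(p, fx, fy, cx, cy):
    """Project a camera-frame point to pixels; this sketch assumes x forward, y left, z up."""
    x, y, z = p
    if x <= 0:
        return None  # behind the camera, so the object is not visible in this image
    return (cx - fx * y / x, cy - fy * z / x)


if __name__ == "__main__":
    # Ego drives straight along world x at 10 m/s.
    ego_at = ego_trajectory([(0.0, Pose(0, 0, 0, 0)), (1.0, Pose(10, 0, 0, 0))])
    lidar = Pose(1.0, 0.0, 1.5, 0.0)   # hypothetical lidar mounting
    camera = Pose(2.0, 0.0, 1.2, 0.0)  # hypothetical camera mounting
    # Static object annotated in the lidar sweep at t = 0.10 s; camera frame captured at t = 0.15 s.
    p_cam = transfer_annotation((20.0, 2.0, -1.5), 0.10, lidar, 0.15, camera, ego_at)
    print(p_cam)  # approximately (18.5, 2.0, -1.2)
    print(project_pinhole(p_cam, 1000.0, 1000.0, 960.0, 540.0))
```

In the demo, the camera frame is captured 50 ms after the lidar sweep while the vehicle travels at 10 m/s. Skipping the ego-motion step (reusing the t = 0.10 s ego pose for the camera) would therefore place the object 0.5 m too far away in the camera frame. A real system would also have to handle full 3D rotations, box extents and orientation, lens distortion, and per-object motion estimates.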

“The mathematics behind it all were not so difficult,” explains Henrik Arnelid, machine learning engineer and product developer at Kognic. “However, making all these on-demand predictions and calculating where the object should be – that was the tough assignment. In order to improve workflows, you have to think ‘outside the bounding box’, so to speak!”

Find out more at Booth 6104.