Tony Gioutsos, director of portfolio development for autonomous at Siemens, talks us through a truly robust simulation-based verification and validation procedure for AVs, in advance of his presentation at the Autonomous Vehicle Test & Development Symposium.

What do you see as the ideal balance and relationship between simulation and real-world testing for AV and ADAS?

I’ve been involved with automotive algorithms for 30 years, and my experience tells me that we should have roughly a 100,000 to 1 ratio of simulation data to real data. However, this depends on being able to rely fully on the real data. For simulation data, we can settle for slightly less than 100%, but the real-world data must be 100% reliable.

In other words, if a real event occurs, the system must act appropriately – exactly. That real event can then be simulated in something like 100,000 variations. The system should then act correctly in 99,500 of these variations, as the remaining 500 scenarios are likely to be irrelevant or not realistic. Of course, if all 100,000 variations work perfectly, then the HAV system becomes even more robust and resilient. If it can deal with these extraordinary events, we can be sure it will be able to navigate real-world events it has not encountered before.
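The arithmetic behind that acceptance criterion can be sketched in a few lines. This is only an illustration: the function names and the 0.995 target (99,500 out of 100,000) are assumptions derived from the figures quoted above, not part of any Siemens tool.

```python
def variation_pass_rate(results):
    """Fraction of simulated variations in which the system acted correctly."""
    if not results:
        raise ValueError("at least one simulation result is required")
    return sum(1 for ok in results if ok) / len(results)


def meets_target(results, target=0.995):
    """True when the pass rate reaches the (assumed) 99.5% target."""
    return variation_pass_rate(results) >= target


# One real event simulated in 100,000 variations, 99,500 handled correctly.
results = [True] * 99_500 + [False] * 500
rate = variation_pass_rate(results)
acceptable = meets_target(results)
```

In practice the failing variations would also be inspected individually to confirm that they really are irrelevant or unrealistic rather than simply counted.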

What is the background to your presentation?

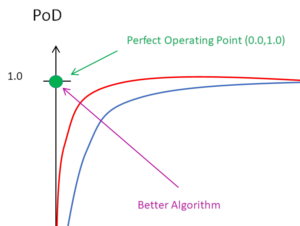

Verification and validation (V&V) of highly automated vehicles (HAV) requires the use of simulation on a massive scale to create a design of experiments (DOE) and Monte Carlo series of tests. These test results can then be correlated to desired results by using standard estimation and detection principles. As an example, for a detection case such as automatic emergency braking (AEB), a receiver operating characteristic (ROC) curve – where the true positive rate is plotted against the false positive rate at various threshold settings – can be computed. This then provides a V&V of the AEB system.
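As a rough illustration of how such a ROC curve can be computed from simulation results, the sketch below sweeps a decision threshold over per-run scores. The scores and labels are made-up illustrative values, and the idea that the AEB logic emits a single "threat score" per run is an assumption made only for this example.

```python
def roc_curve(scores, labels):
    """Return (false positive rate, true positive rate) points.

    scores: per-run detection score (hypothetical AEB threat score).
    labels: True when braking was actually required in that run.
    """
    positives = sum(1 for label in labels if label)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative run")
    points = [(0.0, 0.0)]
    # Lower the threshold step by step; a run "fires" when score >= threshold.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, l in zip(scores, labels) if s >= threshold and l)
        fp = sum(1 for s, l in zip(scores, labels) if s >= threshold and not l)
        points.append((fp / negatives, tp / positives))
    return points


def area_under_curve(points):
    """Trapezoidal area under the ROC points (1.0 is a perfect detector)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Illustrative data only: six simulated runs.
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
labels = [True, True, False, True, False, False]
points = roc_curve(scores, labels)
auc = area_under_curve(points)
```

Each point on the curve corresponds to one threshold setting, so the curve shows the trade-off between missed braking events and false activations across the whole set of simulated runs.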

Run us through the process.

The process begins with a series of appropriate scenarios, which establish the ‘base’ set of tests that our system must analyze and work from. As this baseline, we will choose a number of edge cases from a wide variety of sources such as Euro NCAP, GIDAS, CIDAS, Streetwise, MCity ABC and ASAM. Once this base set is defined, we can move on to sensor modeling.

The next step in the process involves correctly modeling the sensors, as well as the associated perception and control algorithms. With accurate, physics-based sensor models, combined with software-in-the-loop (SIL), hardware-in-the-loop (HIL), driver-in-the-loop (DIL) or vehicle-in-the-loop (VIL) testing, we vastly reduce the need for real-world testing. For creating the models themselves, we focus in particular on the detailed physics models required to simulate camera, radar, lidar and V2X.

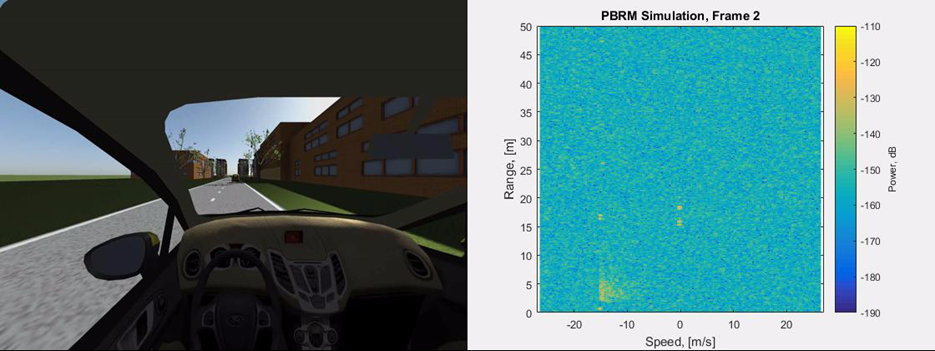

The image shows an example of a detailed physics-based radar model. The left side of the image is the camera view of a vehicle as it drives through a scene. The right side is what is commonly called a range-Doppler image of the same scene. The range-Doppler image is a somewhat processed version of the raw radar data. It plots the radar cross-section against the range and velocity relative to the ego vehicle. We then correlate this to real data by working with various sensor manufacturers and suppliers.

Once the sensor models are chosen, deterministic and stochastic variations can be applied to expand the scenario database. This creates the massive number of variations that the V&V framework needs for analysis. We can vary factors such as the sensor noise, actor trajectories, weather and lighting, road marking quality, sensor mounting, and much more.
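A minimal sketch of how deterministic and stochastic variation might be combined is shown below. It is not the PreScan API: the parameter names, levels and distributions are all hypothetical. The deterministic factors are expanded as a full-factorial grid (a simple form of DOE), and the stochastic factors are drawn by Monte Carlo sampling within each grid cell.

```python
import itertools
import random

# Hypothetical deterministic factors and their levels (full-factorial grid).
DETERMINISTIC_LEVELS = {
    "weather": ["clear", "rain", "fog"],
    "lighting": ["day", "dusk", "night"],
    "road_marking_quality": [1.0, 0.5],
}


def generate_variations(base_scenario, samples_per_cell, seed):
    """Expand one base scenario into grid cells times random samples."""
    rng = random.Random(seed)  # seeded so the whole set can be regenerated
    names = list(DETERMINISTIC_LEVELS)
    variations = []
    for combo in itertools.product(*(DETERMINISTIC_LEVELS[n] for n in names)):
        for _ in range(samples_per_cell):
            variation = dict(base_scenario)
            variation.update(zip(names, combo))
            # Hypothetical stochastic factors and distributions.
            variation["sensor_noise_std"] = rng.uniform(0.0, 0.2)
            variation["actor_speed_offset_mps"] = rng.gauss(0.0, 1.5)
            variation["sensor_yaw_offset_deg"] = rng.gauss(0.0, 0.5)
            variations.append(variation)
    return variations


base = {"name": "pedestrian_crossing"}  # illustrative base scenario
variations = generate_variations(base, samples_per_cell=5, seed=42)
# 3 weather x 3 lighting x 2 marking levels x 5 samples = 90 variations
```

A full-factorial grid grows quickly as factors are added, so a real campaign would likely use a more economical experimental design for the deterministic part.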

These variations should be orchestrated with tracking in place so that situations with unexpected results can be played back. This is an important step, because a proper V&V of the entire HAV system is only possible by analyzing the results and correlating them to a set of requirements.
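One simple way to make unexpected results replayable is to record the random seed of every run alongside its outcome. The sketch below assumes this approach; `run_variation` is a toy stand-in for a real simulation, and its braking model, constants and pass criterion are hypothetical.

```python
import random

EGO_SPEED_MPS = 15.0    # hypothetical closing speed
MAX_DECEL_MPS2 = 8.0    # hypothetical braking capability


def run_variation(variation_id, seed):
    """Toy stand-in for one simulation run, fully determined by its seed."""
    rng = random.Random(seed)
    initial_gap_m = rng.uniform(5.0, 60.0)
    reaction_delay_s = rng.uniform(0.1, 0.6)
    stopping_distance_m = (EGO_SPEED_MPS * reaction_delay_s
                           + EGO_SPEED_MPS ** 2 / (2 * MAX_DECEL_MPS2))
    remaining_gap_m = initial_gap_m - stopping_distance_m
    return {
        "variation_id": variation_id,
        "seed": seed,
        "remaining_gap_m": remaining_gap_m,
        "passed": remaining_gap_m > 0.0,  # requirement: stop before contact
    }


def run_campaign(n_runs, master_seed):
    """Run many variations, logging the seed of each so it can be replayed."""
    master = random.Random(master_seed)
    return [run_variation(i, master.randrange(2 ** 32)) for i in range(n_runs)]


def replay(record):
    """Re-run a logged variation from its recorded id and seed."""
    return run_variation(record["variation_id"], record["seed"])


log = run_campaign(n_runs=200, master_seed=7)
failures = [record for record in log if not record["passed"]]
replayed = [replay(record) for record in failures]
```

Because each run depends only on its logged seed, any failing record can be reproduced exactly and then compared against the requirement it violated.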

In addition to the generic HAV system with its world and sensor models, ‘digital twins’ of the vehicle, occupants and tires should also be added to the process. The tires are the only part of the vehicle touching the ground and play an important part in the overall analysis. Finally, the occupants in the vehicle are what matter most, so when the HAV system does not meet expectations, it must be immediately apparent what the impact on the occupants will be.

What new technology is enabling this simulation approach?

We have recently updated our core tool, the Siemens Simcenter PreScan, with more advanced sensor modeling capabilities. It now includes detailed physics-based sensor models that allow the user to model a sensor from start to finish. We have also added an API framework that allows simple variation changes to be implemented in a seamless way. In addition, PreScan is now able to operate in a cloud or high-performance cluster environment to make massive computations possible.

What are the main issues and challenges in simulation today and how can they be solved?

Probably the biggest issue today is sensor modeling, in other words, making your simulated sensor data match real sensor data. At Siemens, we feel that we have the best sensor models in the industry, so we are certainly getting closer and closer to solving the issue.

The other major concern is processing time. To get it down to manageable levels you need hardware that is up to the job. GPUs for sensor simulation are a particular bottleneck and as a result, they are currently the main thing limiting the processing of massive simulations.

Catch Tony’s presentation titled “A massive simulation approach to verify and validate AV systems” at the Autonomous Vehicle Test & Development Symposium. For more information, up-to-date programs and rates, click here.
