Launched during Nvidia’s annual GTC event, Nvidia DRIVE Hyperion 8 is a compute architecture and sensor suite for fully self-driving systems. Designed for the highest levels of functional safety and cybersecurity, the platform is supported by sensors from a wide range of leading vendors, including Continental, Hella, Luminar, Sony and Valeo. The platform is expected to be available for 2024 vehicle models.

The production platform is designed to be open and modular, so customers can use only what they need: anything from core compute and middleware all the way to NCAP, Level 3 driving, Level 4 parking and AI cockpit capabilities.

During the GTC keynote, Nvidia founder and CEO Jensen Huang showed a DRIVE Hyperion 8 vehicle driving autonomously from Nvidia’s headquarters in Santa Clara, California, to Rivermark Plaza in San Jose.

DRIVE Hyperion is also scalable. The current platform, built on DRIVE Orin, is designed to be upgradeable to the next-generation Nvidia DRIVE Atlan system-on-a-chip, and the DriveWorks APIs are compatible across generations.

With a functionally safe AI compute platform at its core, DRIVE Hyperion provides a secure base for autonomous vehicle development. Two DRIVE Orin systems-on-a-chip provide redundancy and fail-over safety, as well as ample compute for Level 4 self-driving and intelligent cockpit capabilities.

The DRIVE Hyperion 8 developer kit also includes Nvidia Ampere architecture GPUs. This additional high-performance compute gives developers headroom to test and validate new software capabilities.

The Nvidia DriveWorks Sensor Abstraction Layer streamlines sensor setup with easy-to-use plugins, while DRIVE AV Software contains the deep neural networks for perception, mapping, planning and control.

Sensor setup

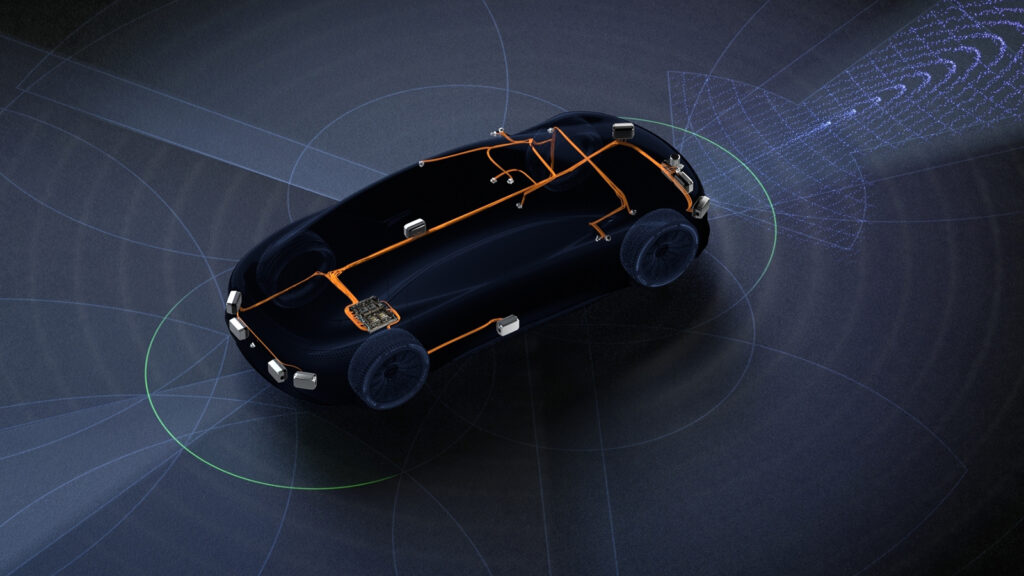

By including a complete sensor setup on top of centralized compute and AI software, DRIVE Hyperion provides everything needed to validate an intelligent vehicle’s hardware on the road.

Its sensor suite comprises 12 cameras, nine radars, 12 ultrasonic sensors and one front-facing lidar. By combining sensors from best-in-class suppliers with sensor abstraction tools, autonomous vehicle manufacturers can tailor the platform to their own self-driving solutions.

This open, flexible ecosystem lets developers test and validate their technology on the exact hardware that will go into the vehicle.

The long-range Luminar Iris sensor provides the front-facing lidar, using a custom architecture designed to meet the most stringent performance, safety and automotive-grade requirements.

“Nvidia has led the modern compute revolution, and the industry sees them as doing the same with autonomous driving,” said Austin Russell, founder and CEO of Luminar. “The common thread between our two companies is that our technologies are becoming the de facto solution for major auto makers to enable next-generation safety and autonomy. By taking advantage of our respective strengths, auto makers have access to the most advanced autonomous vehicle development platform.”

The radar suite includes Hella short-range radars and Continental long-range and imaging radars for redundant sensing. Sony and Valeo supply cutting-edge cameras for visual sensing, and Valeo also provides the ultrasonic sensors used to measure the distance to nearby objects.

“Nvidia DRIVE Hyperion is a complete platform for developing autonomous vehicles, which is why Sony has integrated its sensors to give our customers the most effective transition from prototype to production vehicles,” said Marius Evensen, head of automotive marketing at Sony.

According to Nvidia, because DRIVE Hyperion is open, it can shorten the AV industry’s time to market by letting manufacturers build on Nvidia’s own development work. The platform includes all the tools the company’s engineers use to record and process data from these sensors in real time.

The entire toolset is also precisely synchronized and calibrated for 4D data collection, saving developers time as they work to deploy safe and robust autonomous vehicles.