Researchers at North Carolina State University have developed a new method to help artificial intelligence (AI) extract three-dimensional (3D) information from 2D images. A 2D image provides useful detail, but it is not a direct match for the real-world, three-dimensional environment that a camera sees. The work was recently presented at the International Conference on Computer Vision in Paris, France.

“Existing techniques for extracting 3D information from 2D images are good, but not good enough,” said Tianfu Wu, co-author of a paper on the work and an associate professor of electrical and computer engineering at the university. “Our new method, called MonoXiver, can be used in conjunction with existing techniques – and makes them significantly more accurate.”

This development could prove particularly useful for autonomous vehicles and similar applications, because cameras are considerably cheaper than other hardware used for 3D navigation, such as lidar.

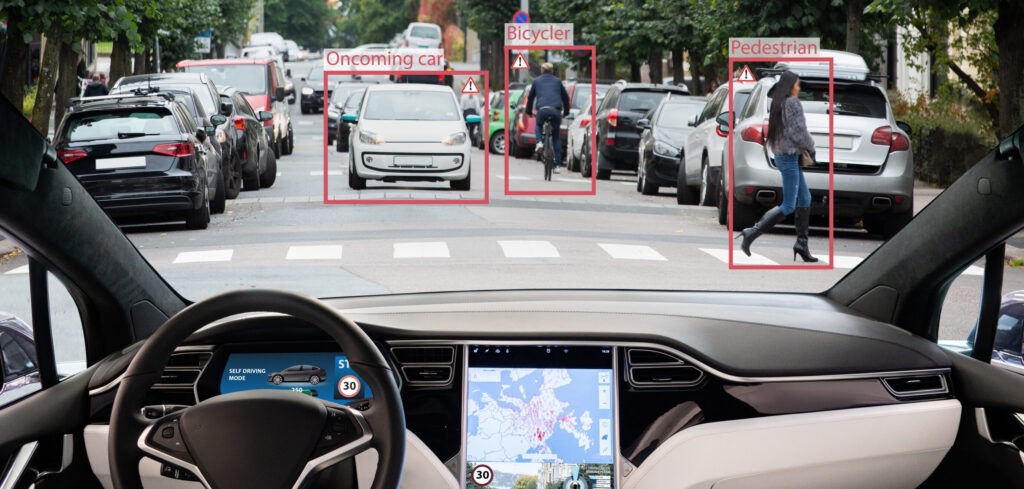

Existing techniques that extract 3D data from 2D images make use of bounding boxes. These techniques train AI to scan a 2D image and place 3D bounding boxes around the objects in it, such as each car on a street. The boxes are cuboids, each defined by eight corner points, and they help the AI estimate the dimensions of each object and where it sits in relation to the others. However, the bounding boxes produced by existing programs can be imperfect and often fail to include parts of a vehicle or other object that appears in the image.
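To make the idea of a cuboid concrete, the short Python sketch below computes the eight corner points of a box from its center, its dimensions and its heading. The function name, the parameter layout and the choice of z as the vertical axis are assumptions made for illustration; they are not taken from MonoXiver or from any of the programs mentioned in this article.

```python
import math


def cuboid_corners(center, size, yaw):
    """Return the eight corner points of a 3D bounding box.

    center: (x, y, z) of the box center.
    size:   (length, width, height) of the box.
    yaw:    rotation about the vertical (z) axis, in radians.
    All conventions here are illustrative assumptions.
    """
    cx, cy, cz = center
    length, width, height = size
    cos_yaw, sin_yaw = math.cos(yaw), math.sin(yaw)

    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-width / 2, width / 2):
            for dz in (-height / 2, height / 2):
                # Rotate the horizontal offset by yaw, then shift to the center.
                x = cx + cos_yaw * dx - sin_yaw * dy
                y = cy + sin_yaw * dx + cos_yaw * dy
                z = cz + dz
                corners.append((x, y, z))
    return corners
```

Two values per axis across three axes give the eight corners. A box that is slightly too small or slightly misplaced will leave part of the object outside those corners, which is the kind of error described above.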

Wu says that the new MonoXiver method uses each bounding box as an anchor point and has the AI perform a second analysis of the area surrounding each box. This second analysis results in the program producing many additional bounding boxes surrounding the anchor.

To determine which of these secondary boxes has best captured any missing parts of the object, the AI does two comparisons. One looks at the geometry of each secondary box to see if it contains shapes that are consistent with the shapes in the anchor box. The other looks at the appearance of each box to see if it contains colors or other visual characteristics that are similar to those in the anchor box.
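The refinement step described above can be sketched roughly in Python. This is a toy illustration, not the researchers' implementation: the `Box3D` type, the random jitter used to generate secondary boxes, the ratio-based geometry score, the cosine-similarity appearance score and the hypothetical `features` callback are all assumptions standing in for the learned components of the real system.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Box3D:
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float


def sample_proposals(anchor, n, max_shift, max_scale, rng):
    """Generate n secondary boxes by jittering the anchor's position and size."""
    def shift():
        return rng.uniform(-max_shift, max_shift)

    def scale():
        return rng.uniform(1.0 - max_scale, 1.0 + max_scale)

    return [
        Box3D(anchor.x + shift(), anchor.y + shift(), anchor.z + shift(),
              anchor.length * scale(), anchor.width * scale(),
              anchor.height * scale())
        for _ in range(n)
    ]


def geometry_score(anchor, box):
    """Toy geometry comparison: 1.0 when dimensions match, lower otherwise."""
    pairs = [(anchor.length, box.length),
             (anchor.width, box.width),
             (anchor.height, box.height)]
    score = 1.0
    for a, b in pairs:
        score *= min(a, b) / max(a, b)
    return score


def appearance_score(u, v):
    """Toy appearance comparison: cosine similarity of two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def select_best(anchor, proposals, features):
    """Pick the proposal whose geometry and appearance best match the anchor.

    `features` is a hypothetical callback mapping a box to a feature vector.
    """
    anchor_features = features(anchor)

    def score(box):
        return (geometry_score(anchor, box)
                + appearance_score(anchor_features, features(box)))

    return max(proposals, key=score)


if __name__ == "__main__":
    anchor = Box3D(0.0, 0.0, 10.0, 4.0, 2.0, 1.5)
    proposals = sample_proposals(anchor, 20, 0.5, 0.1, random.Random(0))
    # Constant features make every box look alike, so geometry decides.
    print(select_best(anchor, proposals, lambda box: (1.0, 0.0)))
```

Adding the two scores with equal weight is an arbitrary choice for this sketch; the article does not say how the two comparisons are combined.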

“One significant advance here is that MonoXiver allows us to run this top-down sampling technique, creating and analyzing the secondary bounding boxes, very efficiently,” said Wu.

To measure the accuracy of the new method, researchers tested it using two data sets of 2D images: the well-established KITTI data set and the more challenging, large-scale Waymo data set.

“We used the MonoXiver method in conjunction with MonoCon (top) and two other existing programs that are designed to extract 3D data from 2D images, and MonoXiver significantly improved the performance of all three programs,” said Wu.

While you might expect this extra analysis to slow processing, Wu says the cost is modest: MonoCon runs at about 55 frames per second on its own, and at around 40 frames per second with MonoXiver added.
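The frame rates quoted above can be converted into processing time per frame with a few lines of Python. The variable names are illustrative, and because the article gives both figures as approximate, the results should be read as rough estimates only.

```python
def frame_time_ms(fps):
    """Convert a frame rate into milliseconds spent per frame."""
    return 1000.0 / fps


baseline_fps = 55.0  # MonoCon on its own, as quoted in the article
refined_fps = 40.0   # MonoCon with MonoXiver, as quoted in the article

# Extra time per frame attributable to the refinement step.
added_ms = frame_time_ms(refined_fps) - frame_time_ms(baseline_fps)

# Fraction of throughput given up in exchange for the accuracy gain.
throughput_drop = 1.0 - refined_fps / baseline_fps

print(f"Added time per frame: {added_ms:.1f} ms")
print(f"Throughput reduction: {throughput_drop:.0%}")
```

On these figures the second analysis adds a little under 7 milliseconds per frame, a throughput reduction of roughly 27 percent.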

“We are excited about this work, and will continue to evaluate and fine-tune it for use in autonomous vehicles and other applications,” said Wu.
