dRISK says it has employed its edge-case retraining tool to achieve a sixfold performance improvement in time to detection of high-risk events for autonomous vehicles (AVs).

Currently, semi-autonomous and autonomous vehicles do not always detect high-risk events in time to react to them (oncoming cars peeking into the lane from behind other vehicles, vehicles running red lights concealed by other cars). However, dRISK’s tools for retraining AVs to recognize edge cases represent a dramatic step forward in the ability to retrain autonomous vehicles to outperform humans in even the trickiest driving scenarios.

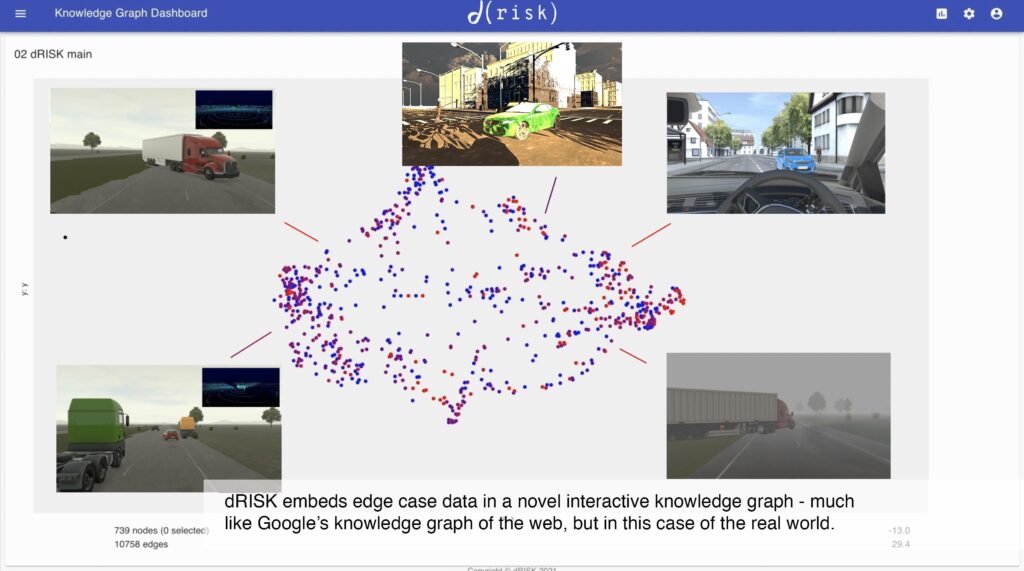

The startup’s patented knowledge graph technology (analogous to Google’s knowledge graph of the internet, but in dRISK’s case a knowledge graph of real-world events) is said to solve a number of problems that have plagued AV developers so far – encoding high-dimensional data from all the different relevant data sources into a tractable form, and then offering the full spectrum of edge cases so as to retrain not just with what has already happened but with what will happen in the future.

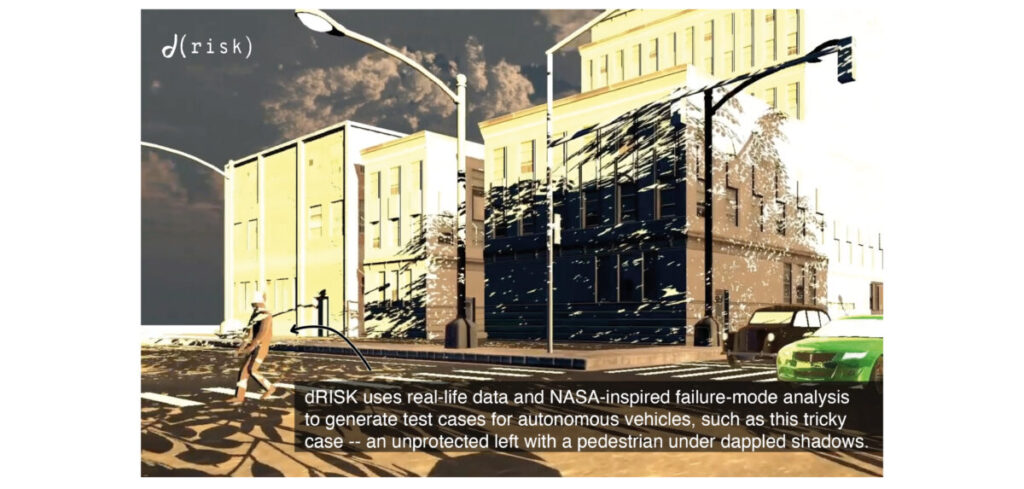

dRISK, which has offices in London, UK, and Pasadena, California, claims it delivers simulated and real+simulated edge cases in semi-randomized, impossible-to-game training and test sequences, to achieve superior testing and retraining results for customers on real-life data.

Unlike traditional training and development techniques, in which AVs are trained to recognize primarily whole entire vehicles and pedestrians under advantageous lighting conditions, with dRISK’s edge cases AVs are trained to recognize just the predictors of high-risk events (such as the headlights of an oncoming car peeking into the lane amid low-visibility). AV systems trained this way can recognize high-risk events sooner, without a significant decrease in performance on low-risk events.