MIT and Toyota researchers have designed a new model to help autonomous vehicles determine when it is safe to merge into traffic at intersections with obstructed views.

The researchers developed a model that uses its own uncertainty to estimate the risk of potential collisions or other traffic disruptions at such intersections. It weighs several critical factors, including all nearby visual obstructions, sensor noise and errors, the speed of other cars, and even the attentiveness of other drivers. Based on the measured risk, the system may advise the car to stop, pull into traffic, or nudge forward to gather more data.

“When you approach an intersection there is potential danger for collision. Cameras and other sensors require line of sight. If there are occlusions, they don’t have enough visibility to assess whether it’s likely that something is coming,” says Daniela Rus, director of the Computer Science and Artificial Intelligence Laboratory (CSAIL). “In this work, we use a predictive-control model that’s more robust to uncertainty, to help vehicles safely navigate these challenging road situations.”

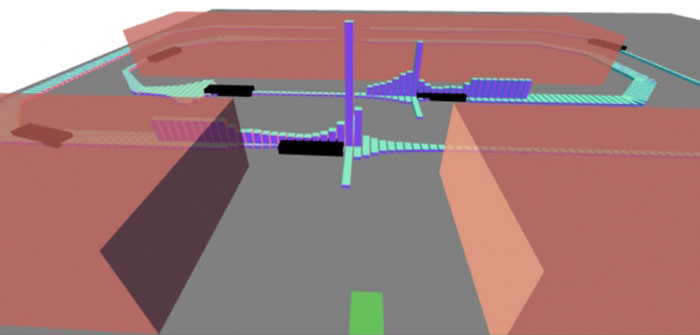

The researchers tested the system in more than 100 trials of remote-controlled cars turning left at a busy, obstructed intersection in a mock city, with other cars constantly driving through the cross street. Experiments involved fully autonomous cars and cars driven by humans but assisted by the system. Across these experiments, the system helped the cars avoid collisions between 70% and 100% of the time, depending on various factors. Other similar models implemented in the same remote-control cars sometimes failed to complete a single trial run without a collision.

The model is specifically designed for road junctions in which there is no stoplight and a car must yield before maneuvering into traffic at the cross street, as when taking a left turn across multiple lanes or entering a roundabout. In their work, the researchers split a road into small segments. This lets the model determine whether any given segment is occupied, which it uses to estimate a conditional risk of collision.

Autonomous cars are equipped with sensors that measure the speed of other cars on the road. When a sensor clocks a passing car traveling into a visible segment, the model uses that speed to predict the car’s progression through all other segments. A probabilistic “Bayesian network” also considers uncertainties — such as noisy sensors or unpredictable speed changes — to determine the likelihood that each segment is occupied by a passing car.
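As a rough illustration of that idea, the sketch below predicts which road segment a passing car is likely to occupy a few seconds after a single speed reading. It is not the researchers' Bayesian network: it assumes, for simplicity, that the speed measurement has Gaussian noise, and the function names, parameters, and segment length are all hypothetical.

```python
import math


def normal_cdf(x, mean, std):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


def occupancy_probabilities(start_pos_m, speed_mps, speed_std_mps,
                            horizon_s, segment_len_m, n_segments):
    """Probability that the observed car is inside each segment after horizon_s.

    Assumption (not from the article): the car's position after horizon_s is
    Gaussian, with spread that grows with the speed uncertainty and the horizon.
    Requires speed_std_mps > 0 and horizon_s > 0.
    """
    mean = start_pos_m + speed_mps * horizon_s
    std = speed_std_mps * horizon_s
    probs = []
    for i in range(n_segments):
        seg_start = i * segment_len_m
        seg_end = (i + 1) * segment_len_m
        # Probability mass of the predicted position that falls in this segment.
        probs.append(normal_cdf(seg_end, mean, std) - normal_cdf(seg_start, mean, std))
    return probs


# A car clocked at 10 m/s (give or take 1 m/s), predicted 2 seconds ahead
# over ten 5-meter segments.
probs = occupancy_probabilities(0.0, 10.0, 1.0, 2.0, 5.0, 10)
most_likely_segment = max(range(len(probs)), key=lambda i: probs[i])
```

A noisier speed reading or a longer prediction horizon spreads the probability over more segments, which is the kind of uncertainty the model has to account for.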

Because of nearby occlusions, however, this single measurement may not suffice. If a sensor can’t see a designated road segment, the model assigns that segment a high likelihood of being occluded. From where the car is positioned, there’s increased risk of collision if the car pulls out quickly into traffic. This encourages the car to nudge forward to get a better view of all occluded segments. As it does so, the model lowers its uncertainty and, in turn, its risk estimate. But even if the model does everything correctly, there’s still human error, so the model also estimates the awareness of other drivers.
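The effect of nudging forward can be shown with a toy calculation. In the sketch below, a segment the sensor cannot see is given a cautious chance of hiding a car, while a segment that is seen to be empty keeps only a small chance of a missed detection. The specific numbers, the independence assumption between segments, and the function names are illustrative choices, not values from the researchers' model.

```python
def segment_risk(visible, observed_occupied, occluded_prior=0.5, miss_rate=0.05):
    """Chance that a segment holds a car, given what the sensor can tell us.

    occluded_prior and miss_rate are hypothetical values for illustration.
    """
    if not visible:
        # Nothing is known about a hidden segment, so stay cautious.
        return occluded_prior
    # A visible segment is either clearly occupied or very likely empty.
    return 1.0 if observed_occupied else miss_rate


def intersection_risk(segments):
    """Chance that at least one segment is occupied (segments assumed independent)."""
    p_all_clear = 1.0
    for visible, observed_occupied in segments:
        p_all_clear *= 1.0 - segment_risk(visible, observed_occupied)
    return 1.0 - p_all_clear


# Each tuple is (visible, observed_occupied).
before_nudge = intersection_risk([(True, False), (False, False), (False, False)])
after_nudge = intersection_risk([(True, False), (True, False), (True, False)])
```

With these numbers the risk drops from roughly 0.76 to roughly 0.14 once the two hidden segments come into view and turn out to be empty.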

The model sums all risk estimates from traffic speed, occlusions, noisy sensors, and driver awareness. It also considers how long it will take the autonomous car to steer a pre-planned path through the intersection, as well as all safe stopping spots for crossing traffic. This produces a total risk estimate.
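One simple way to picture this combination is shown below. The article does not give the actual formula, so this sketch makes two loud assumptions: the four risk terms are added directly, and the sum is scaled by how long the crossing takes relative to a hypothetical reference time. The class and parameter names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class RiskTerms:
    """Per-source risk contributions (hypothetical, unitless scores)."""
    traffic: float
    occlusion: float
    sensor_noise: float
    driver_inattention: float


def total_risk(terms, crossing_time_s, reference_time_s=3.0):
    """Sum the risk terms, then scale by time spent in the intersection.

    The linear time scaling is an assumption made for this sketch only.
    """
    base = (terms.traffic + terms.occlusion
            + terms.sensor_noise + terms.driver_inattention)
    return base * (crossing_time_s / reference_time_s)


terms = RiskTerms(traffic=0.1, occlusion=0.2, sensor_noise=0.05, driver_inattention=0.05)
quick_crossing = total_risk(terms, crossing_time_s=3.0)
slow_crossing = total_risk(terms, crossing_time_s=6.0)
```

Under these assumptions, a crossing that takes twice as long carries twice the total risk.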

That risk estimate gets updated continuously for wherever the car is located at the intersection. In the presence of multiple occlusions, for instance, the car will nudge forward, little by little, to reduce uncertainty. When the risk estimate is low enough, the model tells the car to drive through the intersection without stopping. Lingering in the middle of the intersection for too long, the researchers found, also increases risk of a collision.
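The three possible outcomes (stop, nudge forward, or drive through) can be sketched as a simple decision rule. The thresholds and the `occluded_fraction` input below are hypothetical; the article does not say how the real system picks between actions.

```python
def choose_action(risk, occluded_fraction, go_threshold=0.1, occlusion_threshold=0.2):
    """Pick an action from the current risk estimate.

    risk: current total risk estimate.
    occluded_fraction: share of relevant road segments the sensors cannot see.
    Both thresholds are illustrative assumptions.
    """
    if risk <= go_threshold:
        # Risk is low enough: drive through without stopping.
        return "go"
    if occluded_fraction > occlusion_threshold:
        # Much of the road is hidden: creep forward to gather more data.
        return "nudge"
    # The road is visible but risky, for example because a car is approaching.
    return "stop"


actions = [
    choose_action(risk=0.05, occluded_fraction=0.5),
    choose_action(risk=0.40, occluded_fraction=0.5),
    choose_action(risk=0.40, occluded_fraction=0.0),
]
```

In a running system, a rule like this would be re-evaluated every time the risk estimate is updated, so a sequence of small nudges can eventually give way to a single uninterrupted crossing.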

Running the model on remote-control cars in real time indicated that it is efficient and fast enough to deploy into full-scale autonomous test cars in the near future, according to the researchers. Far more rigorous testing is required, however, before it can be used in production vehicles.

The model would serve as a supplemental risk metric that an AV system can use to better reason about driving through intersections safely. It could also potentially be implemented in certain advanced driver-assistive systems, where humans maintain shared control of the vehicle.

Next, the researchers aim to include other challenging risk factors in the model, such as the presence of pedestrians in and around the road junction.