Testing lies at the root of any innovation. In the case of autonomous vehicles, it’s crucial not only for safety, but also for ironing out kinks in development and ultimately persuading people that cars without drivers are a good idea.

However, as has already been seen, when AVs, or rather the people responsible for them, get it wrong in real-world scenarios, the effects can sometimes be devastating.

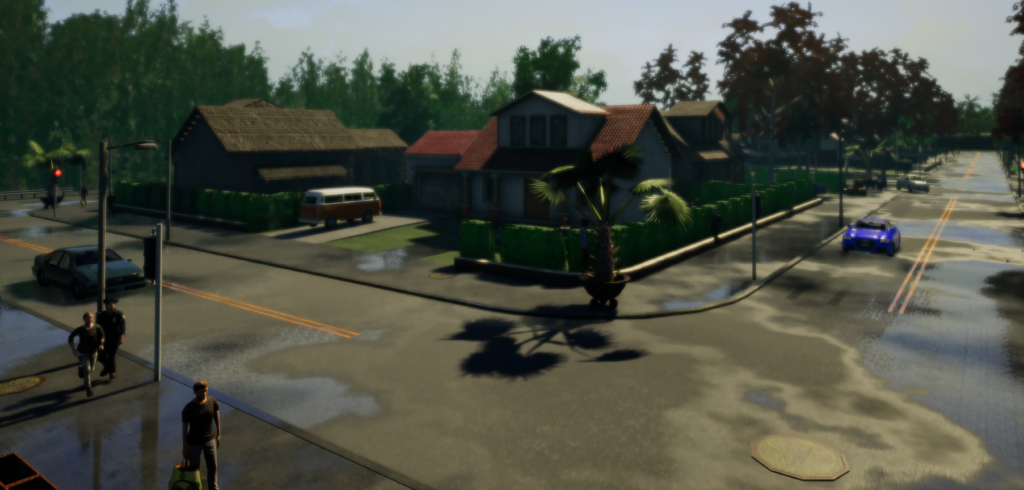

Unsurprisingly, those involved in developing AVs spend a good deal of time testing in virtual worlds. Thanks to advances in computing power and software, it’s now possible to create any kind of urban environment in which to test. And because the wealth of everyday scenarios layered on top involves virtual people, nobody gets hurt.

However, building these environments requires a staggering amount of labor, data, hardware and patience. For Chris Hoyle, technical director of driving simulation company rFpro, it was a career in software programming, followed by a stint in Formula 1 developing simulators, that led him into the front seat of this cutting-edge industry.

Hoyle began by giving away his own software; now his British company has the industry beating a path to its door. “In 2015 we got our very first customer who wanted to use our systems for the autonomous vehicle market, which was an area that we hadn’t originally anticipated for rFpro’s use,” he admits.

Building the dream

Hoyle reveals that the task is becoming easier thanks to customer convergence, but full autonomy is still a long way down the road and the process is very data-intensive. “We’re gradually building large libraries of scenarios, which will take years. As an example of the data involved, we’re running a system that’s using 32 GPUs and 32 CPUs for a single experiment. It’s the largest single experiment that I’m aware of. It’s producing some 500GB of data per second.”

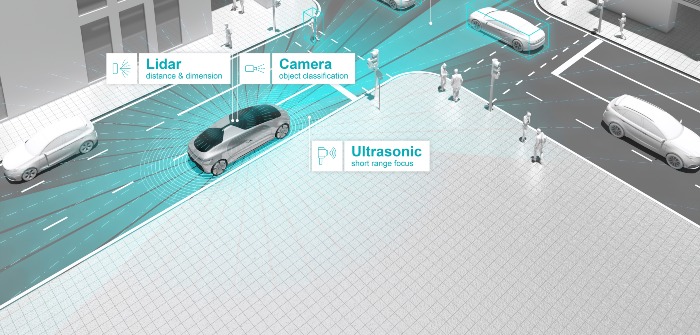

Gathering the data to create these systems requires a phenomenal amount of manpower, which still relies on good old physical human involvement. “Once we’ve decided on an area or a route, we do a very detailed survey,” Hoyle describes. “We drive the entire route with a scanning truck, measuring everything with a combination of lidar, cameras and video.

“A typical day spent scanning will end up with around a petabyte of data. The lidar data we’re capturing gives us the geometry of the road and it’s super-accurate. We know where every sign is, every traffic signal, where the road markings are: all the features that are important to the vehicle sensors being simulated. Then the cameras and video fill it in with the visual information.”

“Lidar also tells us about the material properties of those objects. Those materials react differently to the infrared laser energy. It’s a very CPU/GPU-intensive process, though: we need to turn all that data into a single, automatically registered, contiguous map of the entire route. The next stage is the 3D reconstruction, some of which is completely automatic and some of which involves manual labor. It generally depends on the object: inorganic structures work well.”

Hoyle uses the road surface as an example of this: “It’s a very large inorganic object,” he says. “And largely planar. At the opposite end of the scale, something like a tree we are utterly useless at processing automatically. There’s just so much data present. For example, if you drive past a tree in the scanning truck, it can change shape: with the slightest bit of wind, all the leaves move. That’s just too much data, so it has to be a completely manual task. An engineer will look at the type of tree and then manually place it into the scenario.”

Ryan Robbins, senior human factors researcher at TRL (formerly known as the Transport Research Laboratory), a global organization for innovation in transport and mobility, spends his days working out how cars will react using a different set-up: a full-size Peugeot 3008 installed in TRL’s simulation suite.

Different track

“It’s the thing that makes us different from other simulators out there,” says Robbins. “It’s not just a seat with a steering wheel. It has wraparound screens because we wanted to have the most immersive experience possible, which is crucial when you’re tasked with being as close to the real world as possible.”

TRL was originally a UK government department and was privatized in the 1990s. Given that history, it’s been involved in just about every aspect of vehicle research in the UK. “Our simulator experience goes back to the late 1960s,” Robbins says, “but it was in the 1990s that we got our first computer graphics-based simulator setup. We’ve also recently undergone another major upgrade, which brings our simulator up to date.

“The environments we drive through are all 3D locations that have been built using lidar. That gives us really accurate point cloud data, so when we’re building our 3D model, it can be exactly like the real environment.”

Challenges of virtual testing

For every company working with simulation, introducing people into the mix adds another level of complexity, and rFpro’s Hoyle reveals that it’s the world of video game development and VFX that has helped advance this area.

“We use what we call rigged animation, where there is actually a [digital] skeleton underneath. We’ve benefited enormously from work done by the likes of Disney and Pixar, because if you don’t model humans realistically, and they don’t walk properly, then you get next to zero correlation.”

The biggest challenge in modeling people, however, is producing systems intelligent enough to predict how pedestrians and cyclists are going to behave, and then reproducing that behavior in the virtual world.

“There are three core elements to automated driving: perception, prediction and planning,” says Rick Bourgoise, manager, Toyota Research Institute (TRI). “Perception is what the car ‘sees’; prediction is anticipating what the surrounding traffic is going to do; and planning is the decision-making process to determine how the automated vehicle will respond to the current situation. Prediction is one of the most challenging elements, and human behavior is very difficult to simulate.”

Team players

TRI, which focuses on applying artificial intelligence to mobility and pioneering new technologies, recently announced that it was increasing support for open-source platforms with a donation of US$100,000 to the Computer Vision Center at the Universitat Autònoma de Barcelona. Central to the donation is CARLA (Car Learning to Act), an open-source simulator for automated driving. “Technological advances and growth are possible through collaboration and community support,” explains Vangelis Kokkevis, director of driving simulation at TRI. “Development of a common, open simulation platform will allow TRI and our academic and industrial partners to better exchange code, data and information.”

TRI sees open-source simulation as a valuable asset in its quest for automated driving. “The benefit of CARLA is that it makes it easier to work with our academic partners, whom we like to encourage to use CARLA as an open-source simulation environment for testing their ideas,” states Bourgoise. “Open-source communities, which attract a huge amount of talent and creativity, can accelerate development of automated driving technologies. Simulation enables us to design rare ‘edge case’ scenarios for testing that are difficult to find or create in real-world tests, even on a closed course.

“TRI collaborates with universities and organizations to accelerate research into technologies that can advance our mission. The Computer Vision Center, where CARLA is managed, is just one example of this.”

While Bourgoise enthuses about the virtual proving ground, he is also keen to point out that testing remains a combination of real-world work and simulation. “TRI’s use of CARLA supplements the other simulation work TRI is conducting,” he says. “In real-world testing, our test vehicles use several methods to establish constant, real-time situational awareness of the driving environment inside and outside the vehicle. This includes GPS and mapping, as well as built-in sensors inside and outside the vehicle.”

End game

The continued investment of time, expertise and money from this expanding ecosystem of startups, universities, small- to medium-sized businesses and deep-pocketed auto manufacturers is key to getting the automotive industry to its final destination: fully automated cars. “We’re going to end up over the next few years with millions of test cases. The good thing about that is that we can learn what it is going to be like to ride as a passenger inside an autonomous vehicle years before it happens,” concludes Hoyle.

By Rob Clymo