The Nardò Technical Center is one of the leading proving grounds in the world and is a go-to resource for major automotive manufacturers. Its 6.2km-long handling track features a 1km straight and 16 corners of varying radius and speed. Its renowned layout, which includes crests, bumps and kerbs, makes it ideal for developing new chassis technologies. As ADAS & Autonomous Vehicle International recently reported, Porsche Engineering is now using an engineering-grade digital twin of its Nardò handling circuit to fine-tune automated driving. Here, Josh Wreford, head of sales, rFpro, explains how it helped Porsche develop the digital twin, which enables engineers to assess and benchmark vehicle performance quantitatively at the start of the design cycle. This includes optimising the trade-off between motor type and size and battery sizing to meet range and acceleration targets. The digital twin also facilitates driver-in-the-loop simulations for additional subjective assessments, such as ride and handling.

When did the project first begin and what was the brief?

The project began in 2020, when the initial lidar scans of the handling track were completed. Virtual validation and simulation are now crucial elements of vehicle development, but the key is to ensure that your simulations correlate with the real world. The best way to do this is to carry out real-world validation tests in the same place you conduct your simulations, and a digital twin enables you to do this.

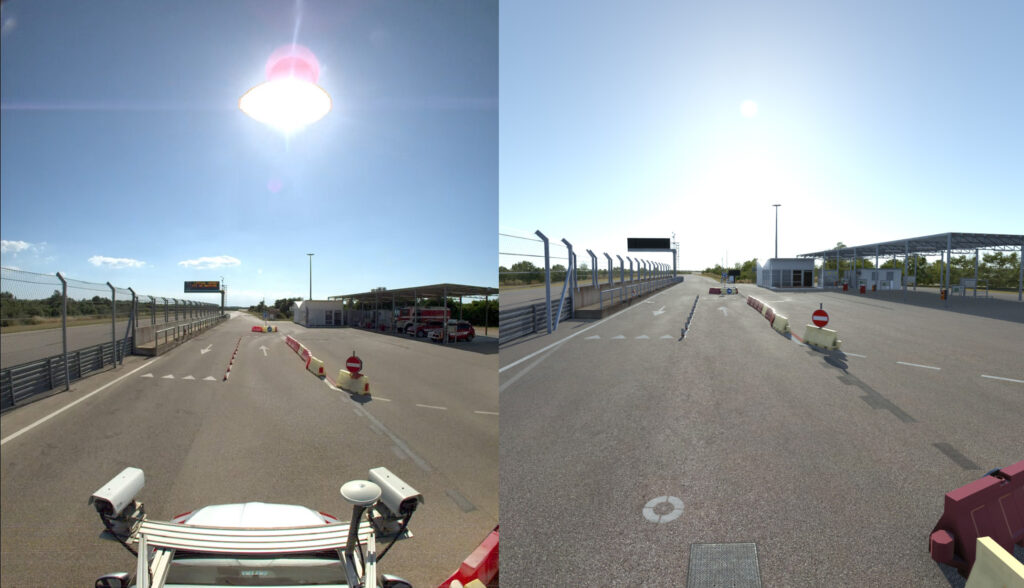

When creating a digital model, we need to collect two sets of data. The lidar point cloud data provides the positional information of everything in the scene, and we then use cameras to recreate the visuals of the environment.

How did you first map the circuit – how long did it take?

At rFpro, we use a specialist lidar scanning company to create the point cloud data for our digital twins. They use highly accurate phase-based laser scanning equipment. Collecting this data for a digital twin can take a matter of days, depending on the size of the environment. We then turn this raw data into a usable model, which involves identifying and characterising all the objects in the scene. Our art team then ensures it is highly realistic visually, too.

What are some of its most interesting features and how are they useful in helping fine-tune vehicle dynamics, particularly for automated driving?

The dry handling circuit provides a technical challenge to vehicles in terms of chassis dynamics and tyre testing, with carefully designed combinations of specific radius curves and straights. When assessing automated driving systems, presenting a vehicle with these demanding corner sequences can be very challenging. They reveal how an AV will respond in such an environment, as they may destabilise the vehicle or force it to decelerate for sharper turns.

Automated driving on test tracks like Nardò poses a significant challenge, as they often don’t contain the same visual cues that you get on a road. So, it can really challenge the systems to ensure they can navigate anywhere.

How does it facilitate driver-in-the-loop testing – and is it simulator agnostic or do you have to use a certain setup?

rFpro’s digital twin replicates the subtleties of the real track, not only with a high-fidelity visual environment but also a high-density engineering-grade road surface model, accurate to 1mm in the Z axis between adjacent points. The road surface data can be passed to a wide variety of tyre or vehicle software models using a variety of different methods, including our native Terrain Server. This ensures that the model under test receives precisely the same inputs that a real car does when on the track. While rFpro’s digital twins are designed to function solely with rFpro software, rFpro itself has been designed specifically to be highly adaptable and can be integrated with virtually any simulator hardware, from a basic USB wheel at a desk to the latest multi-DOF full-motion simulators.
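To illustrate how a tyre or vehicle model might consume a gridded road surface, the sketch below looks up the road height at an arbitrary contact point by bilinear interpolation between the four surrounding grid points. It is a generic illustration, not rFpro's Terrain Server API: the function name `road_height`, the 1cm grid spacing and the height values are all assumptions made up for the example.

```python
import math


def road_height(grid, spacing_m, x_m, y_m):
    """Bilinearly interpolate road height (metres) at (x_m, y_m).

    grid[j][i] holds the height at x = i * spacing_m, y = j * spacing_m.
    Hypothetical helper for illustration only.
    """
    # Convert the query position to fractional grid coordinates.
    gx = x_m / spacing_m
    gy = y_m / spacing_m
    # Pick the cell containing the point, clamped to stay inside the grid.
    i = min(max(int(math.floor(gx)), 0), len(grid[0]) - 2)
    j = min(max(int(math.floor(gy)), 0), len(grid) - 2)
    # Fractional position inside the cell, clamped to [0, 1].
    tx = min(max(gx - i, 0.0), 1.0)
    ty = min(max(gy - j, 0.0), 1.0)
    # Blend along x on the two cell edges, then blend those along y.
    near = grid[j][i] * (1 - tx) + grid[j][i + 1] * tx
    far = grid[j + 1][i] * (1 - tx) + grid[j + 1][i + 1] * tx
    return near * (1 - ty) + far * ty


# Assumed 3x3 patch of heights in metres on an assumed 1cm grid.
patch = [
    [0.000, 0.001, 0.002],
    [0.000, 0.002, 0.004],
    [0.001, 0.003, 0.005],
]
height_mid_cell = road_height(patch, 0.01, 0.005, 0.005)
```

A tyre model would call a lookup of this kind for each contact point at every simulation step, so the same surface detail reaches the model whatever simulator hardware is used.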

Can you share any customer feedback or examples of how it has helped to fine-tune performance?

Unfortunately, a lot of the work we do for OEMs is strictly confidential, so we are unable to go into specific feedback. However, ride has become a crucial area of development for autonomous vehicles. As users, we need to feel comfortable and safe in the vehicle, otherwise we won’t use it again. How the vehicle rides and how it navigates twists and turns, particularly at speed, is really important. How a car makes you feel is entirely subjective of course, so introducing a human into the development loop early in the process using simulation is crucial before investing resources in the AI’s algorithm or chassis hardware.

What will you be showing at the upcoming show in California to help improve automated driving?

We will be showcasing our new ray tracing rendering engine at ADAS & Autonomous Vehicle Technology Expo California (September 20 & 21, 2023, Santa Clara). We believe it is the first to accurately simulate how a vehicle’s sensor system perceives the world. It traces multiple light rays through the scene to accurately capture all the nuances of the real world. As a multi-path technique, it can reliably simulate the huge number of reflections that happen around a sensor. This is critical for accurately portraying reflections and shadows in low-light scenarios or in environments with multiple light sources. It significantly reduces the industry’s dependence on real-world data collection by providing highly accurate synthetic training data.
