Robust driver monitoring is coming under ever sharper scrutiny as more capable assistance features reach the market – and the most advanced systems could offer advantages beyond occupant safety, writes Alex Grant, in the January 2024 issue of ADAS & Autonomous Vehicle International.

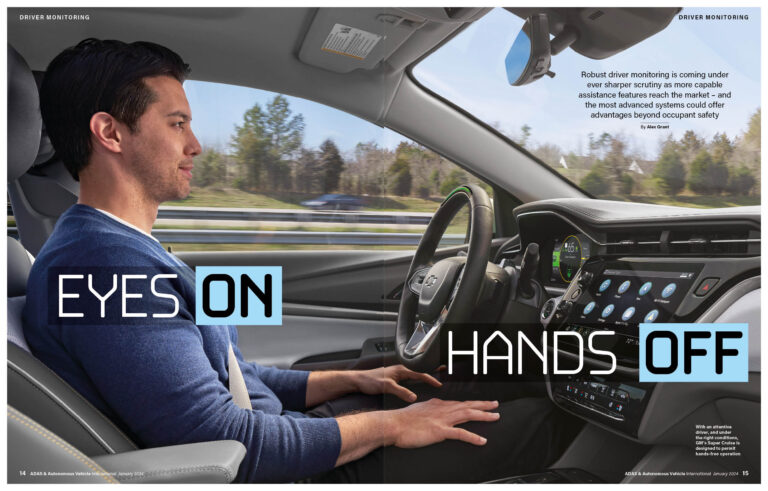

Within the development arc of autonomous vehicles, the last two years might well be remembered for making driving more cooperative. Toyota, Ford and BMW have joined General Motors in adding ‘hands-off, eyes-on’ Level 2 highway assistance to new models, while Mercedes-Benz offers conditional Level 3 features in selected markets. It’s a step forward, but one that risks blurring the lines for end users – drivers still have to be attentive, and monitoring this effectively is crucial.

Of course, distractions are also a challenge for manual driving, and regulators are focusing on driver monitoring systems (DMS) as a solution. Governments in China and the USA have signalled plans to research and mandate their use in future vehicles, and the European Union’s General Safety Regulation 2 (GSR2) has already done so. A DMS will be mandatory for new type approvals in Europe from July 2024 and for all new registrations two years later, and the regulation itself cites the technology’s importance to autonomous vehicle development.

Today’s systems fall into two categories, monitoring attention either indirectly (typically based on steering inputs) or directly (with a camera tracking eye gaze and/or head posture); testing by AAA suggests the latter is much more effective. On a California highway, the organization’s engineers (with a safety spotter on board) examined three hands-off driving scenarios: staring into their laps, looking at the center console, and a third in which they had free rein to try to trick the system. The engineers timed how long each vehicle took to issue warnings or take other action.

In the first two scenarios, camera-based systems from Cadillac and Subaru alerted drivers after four to eight seconds, compared with between 38 and 79 seconds for indirect monitoring technology from Tesla and Hyundai. Although the direct systems performed better in all scenarios, engineers were able to trick both types, and neither type disabled the assistance feature after multiple warnings. Matthew Lum, senior automotive engineer at AAA, says this is a particular challenge when drivers delegate more of the task to the vehicle.

“We haven’t conducted direct research [looking at] driver distraction when using these systems. But, based on human psychology, we are very poor at periodically observing a process which is – for lack of a better term – largely automated,” he explains.

“That would lead me to believe that driver distraction is probably something that occurs more often when using these systems. Not that drivers will intentionally misuse them, it’s just somebody getting lulled into a false sense of security. [That] is why robust driver monitoring is very important.”
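AAA found that neither type of system disabled the assistance feature after repeated warnings. As a rough illustration of what such an escalation could look like, the Python sketch below locks the feature out for the rest of the drive once the driver has ignored a set number of warnings. The class name, the three-warning limit and the rule that a count resets when the driver pays attention again are all hypothetical assumptions, not taken from any manufacturer’s system or test protocol.

```python
from dataclasses import dataclass

# Hypothetical policy value for illustration; not from any regulation or rating protocol.
WARNINGS_BEFORE_LOCKOUT = 3


@dataclass
class AssistSupervisor:
    """Toy supervisor that escalates from warnings to disabling assistance."""

    ignored_warnings: int = 0
    locked_out: bool = False  # once set, assistance stays off for the rest of the drive

    def on_warning_ignored(self) -> None:
        # Each ignored warning counts toward the lockout threshold.
        self.ignored_warnings += 1
        if self.ignored_warnings >= WARNINGS_BEFORE_LOCKOUT:
            self.locked_out = True

    def on_driver_attentive(self) -> None:
        # Attention restored: clear the count, but never lift an existing lockout.
        self.ignored_warnings = 0

    def assistance_available(self) -> bool:
        return not self.locked_out


supervisor = AssistSupervisor()
for _ in range(WARNINGS_BEFORE_LOCKOUT):
    supervisor.on_warning_ignored()
print(supervisor.assistance_available())  # False: the feature has locked out
```

Keeping the lockout in place even after attention returns is one possible design choice; it stops a driver from simply glancing up to reset the system and carrying on as before.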

Public confusion

Consumer awareness is an added challenge. In 2022, the Insurance Institute for Highway Safety (IIHS) surveyed 600 drivers, split equally between regular users of Cadillac, Tesla and Nissan (and Infiniti) assistance features. Among them, 53%, 42% and 12% respectively treated their vehicles as fully self-driving, and there was an increased tendency to eat, drink or send text messages while the systems were active. Almost half of Tesla Autopilot and GM Super Cruise users had experienced a temporary suspension of assisted driving features after repeatedly ignoring warnings.

IIHS introduced new DMS ratings last year, rewarding systems that monitor gaze and head posture, issue multiple alerts, and do not pull away from a full stop if the driver isn’t paying attention. David Zuby, the organization’s executive vice president and chief research officer, adds that even the most advanced systems have limitations.

“Most Level 2-type features don’t have adequate monitoring of drivers’ attention,” he explains. “Only a few companies are using driver-monitoring cameras to detect whether the driver is looking at the road ahead. Many systems use only hands-on-wheel detection. Among camera monitors, some are able to detect where the eyes are looking while others can detect only which direction the face is pointing. A problem with the latter is that the eyes may be looking somewhere – for example, at a mobile device – different from where the nose is pointing. So far, no implementations of camera monitors look to see whether the hands are free to take the wheel if needed – i.e. not occupied with a burger and a Big Gulp.”
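Zuby’s point about head-only tracking can be illustrated with a toy example. In the Python sketch below, a head-pose monitor judges attention only from where the face points, while a gaze monitor also adds the rotation of the eyes relative to the head. The angle thresholds, the simple additive gaze model and all of the names are illustrative assumptions rather than any supplier’s algorithm.

```python
from dataclasses import dataclass

# Hypothetical "road ahead" region in degrees relative to straight ahead.
# Real systems calibrate this per vehicle and driver; these are placeholders.
ROAD_YAW_LIMIT = 20.0
ROAD_PITCH_MIN = -10.0  # looking further down than this counts as off-road
ROAD_PITCH_MAX = 10.0


@dataclass
class Observation:
    head_yaw: float    # where the face ("nose") points, left/right
    head_pitch: float  # where the face points, up/down
    eye_yaw: float     # eye rotation relative to the head, left/right
    eye_pitch: float   # eye rotation relative to the head, up/down


def in_road_region(yaw: float, pitch: float) -> bool:
    return abs(yaw) <= ROAD_YAW_LIMIT and ROAD_PITCH_MIN <= pitch <= ROAD_PITCH_MAX


def attentive_head_only(obs: Observation) -> bool:
    # Head-pose monitor: only knows which way the face is pointing.
    return in_road_region(obs.head_yaw, obs.head_pitch)


def attentive_gaze(obs: Observation) -> bool:
    # Gaze monitor: approximates gaze as head pose plus eye-in-head rotation.
    return in_road_region(obs.head_yaw + obs.eye_yaw,
                          obs.head_pitch + obs.eye_pitch)


# Face points at the road while the eyes glance down at a phone in the lap.
phone_glance = Observation(head_yaw=0.0, head_pitch=-5.0, eye_yaw=0.0, eye_pitch=-30.0)
print(attentive_head_only(phone_glance))  # True: the head-only monitor is fooled
print(attentive_gaze(phone_glance))       # False: the gaze monitor flags distraction
```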

Euro NCAP has similar concerns and added direct driver monitoring to its ratings system (shared with ANCAP) in January 2023, noting that the technology has become mature and accurate enough to be effective. Impairment and cognitive distractions will be added to the ratings from 2026, and technical director Richard Schram says it’s important that these systems be designed with the driver experience in mind.

“We don’t want the system constantly telling you that you’re not paying attention, [because] that will really reduce acceptance of the system,” he explains. “We are pushing for clever systems. Ideally, the car knows you are distracted [but] if there’s nothing critical happening in front of you then it isn’t really a problem per se. If you look away for half a second, the car might say, ‘Oh, there’s more pressure on me because the driver isn’t seeing anything’. That’s the interaction we want.”
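The kind of “clever system” Schram describes can be sketched as a decision rule that weighs how long the driver has looked away against how critical the scene ahead is. In this hypothetical Python example, a brief glance with nothing critical ahead prompts the car to quietly raise its own readiness instead of nagging the driver, while a long glance or a short time-to-collision triggers a warning. The thresholds, the use of time-to-collision as the measure of criticality and the function names are assumptions for illustration only, not Euro NCAP criteria.

```python
from enum import Enum
from typing import Optional


class Response(Enum):
    NONE = "none"               # driver attentive, nothing to do
    HEIGHTEN_ADAS = "heighten"  # quietly raise ADAS readiness, no alert
    WARN = "warn"               # alert the driver


# Hypothetical thresholds for illustration only.
CRITICAL_TTC_S = 4.0  # time-to-collision below which the scene counts as critical
LONG_GLANCE_S = 2.0   # eyes-off-road duration that always triggers a warning


def respond(eyes_off_road_s: float, time_to_collision_s: Optional[float]) -> Response:
    """Pick a response from distraction duration and how critical the scene is.

    time_to_collision_s is None when nothing is detected ahead.
    """
    if eyes_off_road_s <= 0.0:
        return Response.NONE
    critical = time_to_collision_s is not None and time_to_collision_s < CRITICAL_TTC_S
    if critical or eyes_off_road_s >= LONG_GLANCE_S:
        return Response.WARN
    # Brief glance, nothing critical ahead: don't nag, let the car take on more.
    return Response.HEIGHTEN_ADAS


print(respond(0.5, None))  # Response.HEIGHTEN_ADAS
print(respond(0.5, 2.5))   # Response.WARN
```

The point of the split is the acceptance problem Schram raises: the driver only hears an alert when the glance is long or the situation ahead genuinely demands attention.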

Full cabin monitoring

Camera-based systems are developing quickly. Brian Brackenbury, director of product line development at Gentex Corporation, says the automotive industry is benefiting from innovation in the cellphone industry and considering what can be added on top.

Sensors mounted near the rearview mirror are less prone to occlusions than those on the steering column, he explains, while near-infrared technology can track gaze through sunglasses, and higher-resolution cameras could monitor the entire cabin. This would provide opportunities for additional safety features, such as enabling vehicles to pre-tension seatbelts and prime airbags more accurately before a collision, as well as offering new features for customers.

“Not only are [the latest cameras] higher resolution, they’re a combination of infrared and color. We can start doing things like selfie cam [and] video telephony and start using that camera for more than just regulatory stuff to bring value to the end consumer – we see that as a big step,” Brackenbury comments.

“We’re also really excited about 3D depth perception. Once you’ve already got [our] camera pointed in the cabin, you can add structured light, [and] the camera is actually looking at those [invisible, near-infrared] dots in the field. We can model the occupants in the vehicle in the 3D world as a means to better enhance secure safety systems. [This means we can] more accurately identify if they truly are holding onto the steering wheel, or maybe they’re floating over it, [whereas] 2D can still get a little confused.”

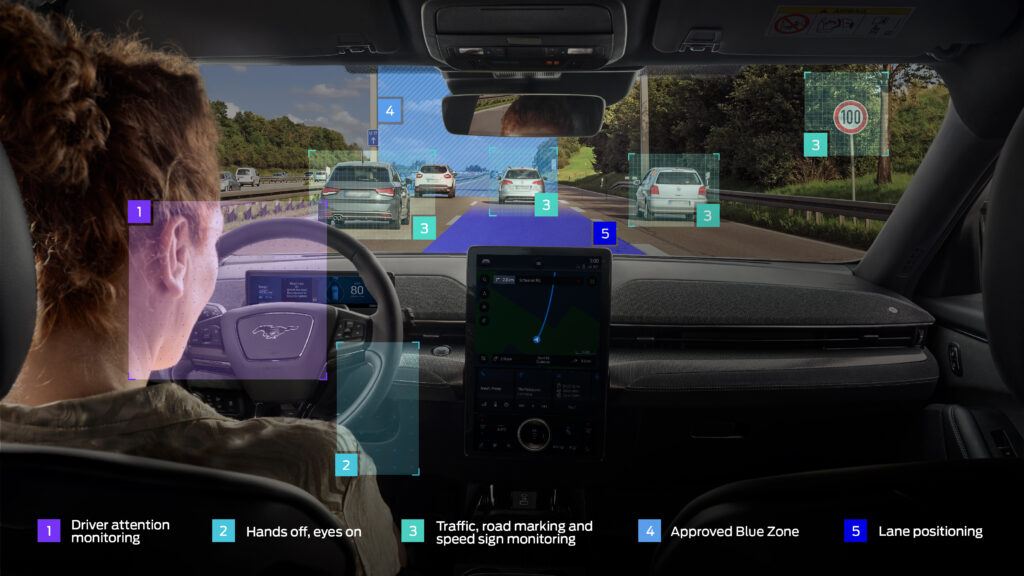

Paul McGlone, CEO of Seeing Machines, is similarly optimistic about the potential for full cabin monitoring. The company has recently partnered with Magna to integrate a DMS into a rearview mirror, which offers a suitable viewpoint, and has further developed its algorithm to support more features, for example closer interaction between the DMS and ADAS functions, and smarter AI-driven digital assistants.

“As driver adoption increases over time, accelerated by regulatory tailwinds, the optical and processing systems put in place can then be harnessed to offer a range of value-adding features to the manufacturer, the driver and indeed passengers,” he explains.

“This includes features based around convenience, such as simulating augmented reality using eye position, comfort (for example, adapting the cockpit to the driver’s profile) and also extending the safety of other occupants in the vehicle. Moreover, over-the-air updates mean these features can be sold to models already on the road.”

Cabin monitoring is also a development focus for Smart Eye, and company CEO Martin Krantz notes that it is significantly more complex. While a DMS would typically have 20 features, cabin monitoring requires higher-resolution cameras with a wider field of view, supporting up to 100 features, including the state of occupants, forgotten object detection and even the position of child seats.

For suppliers, the next stages include an increased need to understand and interpret human physiology, such as intoxication, drowsiness and ill health, and the automotive industry is benefiting from research in other areas. Smart Eye technology is being used in qualitative studies of human behavior and to monitor responses to entertainment and advertising.

Krantz says the company is already working with OEMs and Tier 1s to explore applications in future vehicles. “As new regulation requires vehicle manufacturers to adopt DMS, technology that monitors the state of the driver will become as common as safety belts and airbags in cars. The next step is for the software to understand drivers and passengers on a deeper level. By tracking the facial expressions, gaze direction and gestures of each person in a car, you gain another layer of insight into what they are doing and feeling. This enables much more advanced user interfaces than what we have today,” he explains.

“The smooth and seamless human-machine interaction (HMI) enabled by this technology will be essential in future cars. DMS is already improving road safety in millions of cars on the road, allowing us to explore what this technology could mean for advanced HMI down the line. That’s the next level.”

This feature was first published in the January 2024 issue of ADAS & Autonomous Vehicle International magazine.