In the autonomous vehicle era, cross-industry collaboration has become common practice as developers find new ways to leverage tools and expertise from other sectors. A prime example is the auto industry’s use of game engines in simulation and driverless car training, with OEMs including Ford, General Motors and Toyota among those that have invested heavily in solutions built on the technology. But as realistic as environments such as Gran Turismo and Forza may seem to the untrained eye, can gaming platforms genuinely be used to train self-driving vehicles, and what are the difficulties of converging technologies from the two fields for the purpose of AV engineering?

“I believe it is fair to say that the gaming world has provided good base platforms, but moving from a game engine to a proper AV simulator is far from a trivial task and takes a large investment both in terms of manpower and funding,” says German Ros, a researcher at CARLA (Car Learning to Act), an open-source simulator for autonomous driving research based in California.

Ros says that game engines such as Unreal Engine 4 (UE4) and Unity3D have evolved from pure gaming tools into platforms for building a range of simulation environments, not just those used for AVs.

“State-of-the-art game engines such as UE4 provide a good starting point to build simulators that require good camera-rendering capabilities, management of large-scale scenes and integration with a physics engine,” he explains. “At CARLA we took UE4 as our starting point and built on top of it, creating a tailored autonomous driving simulator with support for traffic simulation, sensor rendering and ingestion of large maps, and a flexible API to give users control over the entire simulation.”

UK company Oxbotica says that using a gaming engine in its AV simulation platform ensures it has a robust, fast, extendable and well-supported codebase with a vast array of essential world builders. The techniques behind animation technology used in the development of blockbuster movies are also being harnessed by Oxbotica, while a video game engine, not dissimilar to those behind Fortnite and Call of Duty, is helping run the company’s virtual test program.

“Simulation technology is one of the key enablers in the development of autonomous vehicles, as it allows engineers to run virtual tests in a near infinite number of different scenarios with varying external factors such as environment or traffic, while additionally modeling unexpected or dangerous scenarios that can’t easily be recreated in the real world,” comments Todd Gibbs, Oxbotica’s head of simulation development, who was the first gaming expert to be employed by the supplier, having previously headed technology development at NaturalMotion, the creator of smartphone application CSR Racing.
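To give a feel for how quickly the number of virtual tests grows when external factors are varied, the following is a minimal Python sketch. It is not Oxbotica’s or CARLA’s method; the `Scenario` class, the factor lists and the `enumerate_scenarios` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Scenario:
    """One virtual test case (hypothetical structure, for illustration only)."""
    weather: str
    time_of_day: str
    traffic_density: str
    hazard: str


# Assumed example factors; a real simulator would expose many more.
WEATHER = ["clear", "rain", "fog", "snow"]
TIME_OF_DAY = ["day", "dusk", "night"]
TRAFFIC = ["light", "moderate", "heavy"]
HAZARDS = ["none", "pedestrian_crossing", "stalled_vehicle", "animal_on_road"]


def enumerate_scenarios():
    """Build every combination of the factors above."""
    return [
        Scenario(*combo)
        for combo in product(WEATHER, TIME_OF_DAY, TRAFFIC, HAZARDS)
    ]


scenarios = enumerate_scenarios()
print(len(scenarios))  # 4 * 3 * 3 * 4 = 144 combinations
```

Even four short lists produce 144 distinct test cases, and each additional factor multiplies the total, which is why such sweeps are run in simulation rather than on the road.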

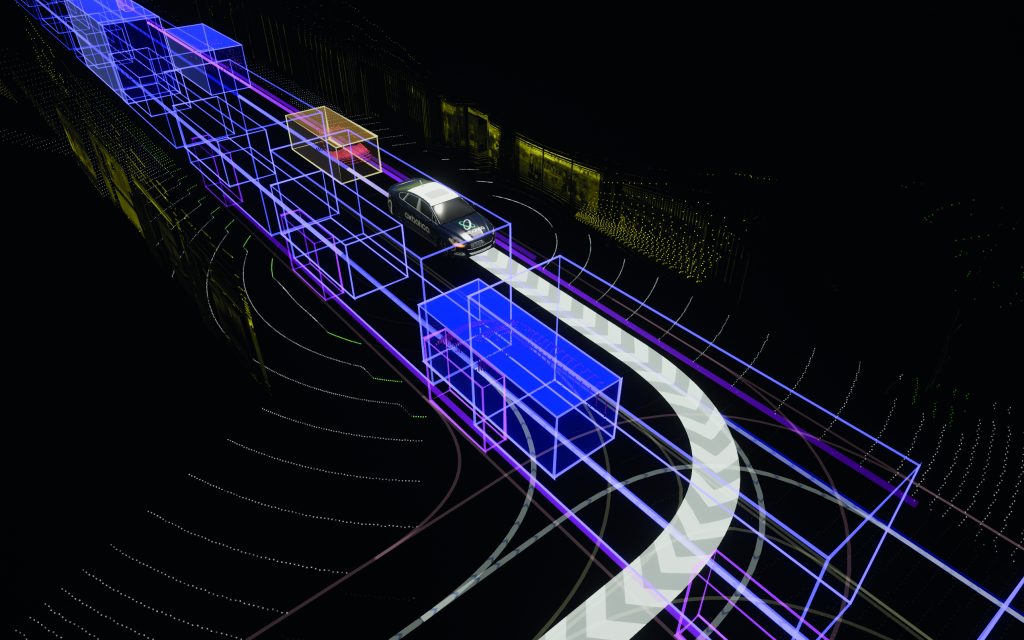

Building those scenarios can involve an element of trickery. Ros says that one needs to effectively “fool” the AI agent into believing that the virtual world in which it operates is in fact the real world. That means creating realistic behavior of vehicles and pedestrians, dynamics and kinematics for vehicles, and simulation of a multitude of sensors such as high-definition cameras, radars and lidars.

Oxbotica recently developed a technique it calls ‘deepfake’ technology, which can generate thousands of photo-realistic images in minutes. “This helps us to expose our autonomous vehicles to a vast number of variations in the same situation,” explains Gibbs, “without real-world testing of a location ever having taken place. This deepfake technology is a great example of how we use virtual reality to develop our AV software as it allows us to use these synthetic images to teach our software and learn from it.”

Merging the physical world and the virtual realm of gaming is no easy task, though, and is particularly challenging when it comes to ensuring that the vehicle can make critical decisions, says Nand Kochhar, vice president of automotive and transportation industry strategy at Siemens Digital Industries Software.

“It’s not just the graphics element that’s important. The simulation also has to have a meaning because the vehicle is making decisions – most of the time, critical, safety-related decisions,” he says. “You need to have the real-world data and that’s one of the key differentiators between the simulated world for vehicle development and in gaming. So the question is, how do you converge this base data with the other knowledge, physics and decision-making?”

Achieving that requires a mixture of technologies, according to Kochhar. First, one needs to build up perception using hardware such as cameras and radar, which monitor the vehicle’s surroundings. That perception then needs to be overlaid with the vehicle behaviors, in a process called Chip to City. Finally, one needs the control mechanisms that process all the information and make a decision in the physical world, such as steering or undertaking a braking maneuver.
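The three stages Kochhar describes (perception, behavior and control) can be pictured as a simple chain of functions. The Python sketch below is a toy illustration under stated assumptions, not Siemens’ implementation: the `Detection` class, the function names, the constant-closing-speed behavior model and the two-second braking threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    kind: str                 # e.g. "pedestrian" or "vehicle"
    distance_m: float         # gap between the ego vehicle and the object
    closing_speed_mps: float  # positive when the gap is shrinking


def perceive(raw_frames):
    """Stage 1: turn (already labelled) sensor readings into detections."""
    return [Detection(**frame) for frame in raw_frames]


def time_to_collision(detection):
    """Stage 2: toy behavior model that assumes a constant closing speed."""
    if detection.closing_speed_mps <= 0:
        return float("inf")  # the object is not getting closer
    return detection.distance_m / detection.closing_speed_mps


def decide(detections, brake_below_s=2.0):
    """Stage 3: pick a control action from the most urgent detection."""
    if not detections:
        return "cruise"
    most_urgent = min(time_to_collision(d) for d in detections)
    return "brake" if most_urgent < brake_below_s else "cruise"


frames = [
    {"kind": "vehicle", "distance_m": 60.0, "closing_speed_mps": 5.0},
    {"kind": "pedestrian", "distance_m": 10.0, "closing_speed_mps": 8.0},
]
print(decide(perceive(frames)))  # pedestrian is 1.25 s away, so "brake"
```

In a real stack each stage is far more elaborate, but the data flow is the same: sensor output feeds a model of how the scene will evolve, which in turn feeds the decision to steer or brake.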

Collating all the data required to feed scenario creation in simulation is a colossal task in itself and beyond the computational power of any gaming platform available today, according to Ros. However, once one has the initial data, Kochhar says, it can be fed into the simulation software, and a neural network or other artificial intelligence can then be created to assemble the virtual environment and identify the millions of potential scenarios.

Another method of training AVs being adopted by industry professionals is mixed reality technology, which combines the virtual and real worlds. Virtual reality headset tech was conceptualized in gaming, but has since evolved to become an R&D and design tool in numerous industries. Finnish company Varjo, a specialist in augmented reality tech, has engineered a headset featuring an optical camera that can overlay virtual elements onto the real world.

Swedish auto maker Volvo has been working with the supplier on advancing and using the world’s first mixed reality application in vehicle development (see ATTI, September 2019, p23). In principle, engineers are able to drive a real car while wearing the specially developed mixed reality headset, seamlessly adding virtual elements or complete features such as a pedestrian or animal crossing the road that seem real to both the driver and the vehicle’s sensor systems, for both development and training purposes.

In this way, a vehicle’s active safety systems and autonomous driving technology can be tested in a safe but real environment, such as a test track, and more cost-effectively, since there is no need to invest in expensive dummy objects or other equipment.

“We can decide what will be real and what will be virtual,” notes Niko Eiden, co-founder and CEO of Varjo. “The operator will see the object [through the headset], the car will take the correct action and, if necessary, give a warning signal. Inflated dummy obstacles are therefore no longer required.”
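The idea of mixing real and virtual obstacles in one test can be sketched in a few lines of Python. This is an illustrative assumption about the data flow, not Varjo’s or Volvo’s software: `TrackedObject`, `inject_virtual`, `should_warn` and the 30m warning distance are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedObject:
    label: str
    distance_m: float
    is_virtual: bool = False  # known to the test rig, ignored by safety logic


def inject_virtual(real_objects, virtual_objects):
    """Merge real detections with virtual ones, nearest object first."""
    tagged = [TrackedObject(o.label, o.distance_m, True) for o in virtual_objects]
    return sorted(list(real_objects) + tagged, key=lambda o: o.distance_m)


def should_warn(objects, warn_distance_m=30.0):
    """Toy safety check: it looks only at distance, never at is_virtual."""
    return any(o.distance_m <= warn_distance_m for o in objects)


real = [TrackedObject("traffic_cone", 80.0)]
virtual = [TrackedObject("moose", 20.0)]
merged = inject_virtual(real, virtual)

print(should_warn(real))    # False: the only real object is 80 m away
print(should_warn(merged))  # True: the virtual moose is within 30 m
```

Because the safety check never inspects the `is_virtual` flag, the virtual moose triggers the same warning a real one would, which is the property that makes inflated dummy obstacles unnecessary in this kind of test.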

As virtual analysis typically only works on top of a layer of real-world data, Varjo’s solution brings these two elements together, providing both the real-world and virtual test data at the same time.

In the long run, industry experts say, simulation will work hand-in-hand with physical testing, with the latter providing the raw data needed to feed the immense computing power of the simulations.

“It’s not going to replace real road testing, but the more kilometers you do in the real world, the more data you have, and you can put that back into the simulator system. Then you can use a computer to replicate the endless number of potential scenarios,” concludes Eiden.