Yoav Hollander, founder and CTO of Foretellix, tells us why the industry needs better solutions if it wants to achieve production-ready AVs that can deal with all possible situations in a safe and responsible way, and why a common language to define test scenarios is an important part of that. He will be going into more detail during his presentation at the Autonomous Vehicle Test & Development Symposium.

Tell us more about your presentation.

One of the main changes that I’ve observed in the AV industry over the last couple of years is that many companies have got to the point where they can make the fundamentals of AVs work just fine – sensing, planning routes, controlling the vehicle. The really hard part, though, is making them safe enough in all of the edge cases.

The result is that the industry has had to adjust its projections of when production-ready AVs will hit the road. For this final stretch of development to go well, we feel that there needs to be a way for the OEMs, as well as regulators and the public, to measure how competently and how safely AVs handle certain situations. It’s not enough to throw about promises and technology specs; the industry needs to be able to quantify how well an AV does in the rain, in a certain city, with a specific kind of junction and with local driving styles. This kind of detail is important, and it’s where ‘scenarios’ come in.

So how do you quantify AV performance and testing procedures?

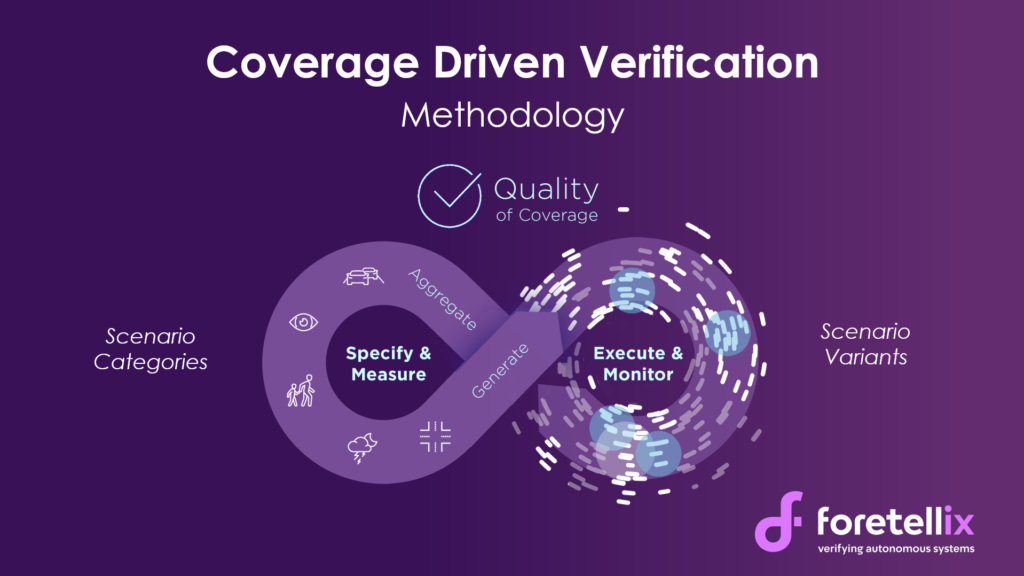

You can define AV tests, and the results of those tests, using what we call scenarios. For example, there would be a scenario called ‘cut in’, in which another vehicle cuts in front of the car being tested. There would be many of these scenarios, each with a number of parameters that can be varied so that the scenario is tested under all conditions: every permutation of its variables, as well as combinations of different scenarios.
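To make the idea of a parameterized scenario concrete, here is a minimal Python sketch. It is not Foretellix's scenario language; the parameter names, the value lists and the `enumerate_instances` helper are hypothetical, chosen only to show how a handful of variables multiplies into many concrete test cases.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical parameter grid for a 'cut in' scenario. Names and values are
# illustrative assumptions, not taken from any real scenario language.
CUT_IN_PARAMETERS = {
    "ego_speed_kph": [30, 60, 90],
    "cut_in_side": ["left", "right"],
    "gap_m": [5, 10, 20],
    "weather": ["dry", "rain"],
}


@dataclass(frozen=True)
class ScenarioInstance:
    name: str
    params: tuple  # sorted (parameter, value) pairs


def enumerate_instances(name, parameters):
    """Yield one concrete instance per combination of parameter values."""
    keys = sorted(parameters)
    for values in product(*(parameters[k] for k in keys)):
        yield ScenarioInstance(name, tuple(zip(keys, values)))


instances = list(enumerate_instances("cut_in", CUT_IN_PARAMETERS))
print(len(instances))  # 3 * 2 * 3 * 2 = 36
```

Even four small parameters give 36 cases; adding more parameters, or combining this scenario with others, grows the count multiplicatively, which is why exhaustive enumeration quickly becomes impractical.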

We have developed a language for writing these scenarios, as well as a tool that interprets the language and collects the resulting data. The tool displays this data in various ways, giving an overview of how many times each scenario has been run, either in simulation or in real-world testing, along with the results. In other words, it gives you an aggregate coverage picture for all the scenarios that were exercised. The tool is called Foretify and the first customers are starting to use it.
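The aggregate coverage picture can be illustrated with a small Python sketch, assuming a hypothetical run record made up of a scenario name, a source, a speed bucket and a pass/fail flag. It does not reflect how Foretify stores or reports data; `Run`, `SPEED_BUCKETS` and `coverage_report` are illustrative names.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    scenario: str
    source: str        # "simulation" or "real_world"
    speed_bucket: str  # hypothetical coverage bucket
    passed: bool


# Hypothetical coverage goal: every speed bucket should be exercised at least once.
SPEED_BUCKETS = ("0-40", "40-80", "80+")


def coverage_report(runs):
    """Aggregate run counts, pass counts and bucket coverage per scenario."""
    report = {}
    for scenario in sorted({r.scenario for r in runs}):
        mine = [r for r in runs if r.scenario == scenario]
        hit = {r.speed_bucket for r in mine}
        report[scenario] = {
            "runs_by_source": dict(Counter(r.source for r in mine)),
            "passed": sum(r.passed for r in mine),
            "bucket_coverage": len(hit & set(SPEED_BUCKETS)) / len(SPEED_BUCKETS),
        }
    return report


runs = [
    Run("cut_in", "simulation", "0-40", True),
    Run("cut_in", "simulation", "40-80", False),
    Run("cut_in", "real_world", "40-80", True),
    Run("unprotected_left_turn", "simulation", "0-40", True),
]
for name, stats in coverage_report(runs).items():
    print(name, stats)
```

Counting simulation and real-world runs against the same buckets is what lets both kinds of testing contribute to a single coverage figure per scenario.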

To help make the language we’ve developed an industry standard, we’ve decided to put it in the public domain. We’re currently working with quite a few organizations to get feedback about it before we open it up to the public. One of those organizations is ASAM (Association for Standardization of Automation and Measuring Systems), which is looking into new ways to define scenarios.

The obvious users of scenarios are OEMs, Tier 1s, regulators and certification agencies. But there are many others who would benefit from such a system: insurance companies, smaller government bodies, and even the public. For such a diverse group of potential users, you ideally want a common language and a software and hardware setup that is compatible across all of them, so that the meaning and outcomes of independent tests are comparable.

Furthermore, AV companies – in particular OEMs – will have multiple working groups within the company. For example, the group that does simulation testing needs to be able to communicate and hand over work efficiently to the group that does real-world testing.

Once you have this universal language, you need tools that implement it by translating the behaviors, parameters and constraints into instructions that software and hardware can use to enact a scenario, either in simulation or in whatever real-world conditions are available.
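The translation step can be sketched as constrained random sampling followed by a mapping to tool-neutral actions. This is an assumption about one possible approach, not a description of how Foretify works: the ranges, the two constraints and action names such as `lane_change_in_front_of` are hypothetical.

```python
import random

# Hypothetical abstract scenario: parameter ranges plus constraints relating them.
RANGES = {
    "ego_speed_kph": (20.0, 100.0),
    "cutter_speed_kph": (20.0, 100.0),
    "gap_m": (3.0, 30.0),
}

CONSTRAINTS = [
    # Illustrative rule: the cutting-in vehicle is slower than the ego vehicle,
    # by at most 30 km/h.
    lambda p: 0.0 < p["ego_speed_kph"] - p["cutter_speed_kph"] <= 30.0,
    # Illustrative rule: require a larger minimum gap at higher ego speeds.
    lambda p: p["gap_m"] >= p["ego_speed_kph"] / 10.0,
]


def sample_concrete(rng, max_tries=10_000):
    """Draw values uniformly in range; return the first draw meeting every constraint."""
    for _ in range(max_tries):
        p = {k: rng.uniform(lo, hi) for k, (lo, hi) in RANGES.items()}
        if all(c(p) for c in CONSTRAINTS):
            return p
    raise RuntimeError("no satisfying assignment found")


def to_instructions(p):
    """Translate a concrete parameter set into a simple, tool-neutral action list."""
    return [
        ("set_speed", "ego", round(p["ego_speed_kph"], 1)),
        ("set_speed", "cutter", round(p["cutter_speed_kph"], 1)),
        ("lane_change_in_front_of", "cutter", "ego", round(p["gap_m"], 1)),
    ]


rng = random.Random(42)
params = sample_concrete(rng)
plan = to_instructions(params)
print(plan)
```

Keeping the action list tool-neutral is what would let the same concrete scenario be handed to a simulator adapter or a test-track setup. Rejection sampling is the simplest way to honor constraints, though it slows down sharply when the constraints are tight.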

What does the future hold for AV development?

AVs are a new field, and no one quite knows how to verify all the edge cases, but at the same time, companies are afraid that their AV will make the news in a high-profile accident. As a result, I think that we will see more and more resources, people and ingenuity devoted to finding all those edge cases and to making sure the vehicles know how to deal with them.

Because this is so important, the other thing that might happen is the pooling of resources, where the various companies and organizations will try to create common scenario libraries, or to donate their scenario libraries so that everybody can test in the same circumstances.

If testing scenarios and methodologies are to be shared in the future, both within a company and between organizations, then having a common, human-understandable language will make that possible. It will also make scenarios portable across various tools, simulators and physical cars, and across different cities and geographies.

As it stands, there are a lot of initiatives working on AV validation, but it’s all a bit disjointed. One of the things we’re aiming for is to bring initiatives together and to ensure that tools aren’t standalone, so that, thanks to this common language, they can work together.

Catch Yoav’s presentation ‘Using portable scenarios and coverage metrics to ensure AV safety’ at the Autonomous Vehicle Test & Development Symposium, which is held at the Autonomous Vehicle Technology Expo in Stuttgart on May 21-23. For more information about the Expo and Conference, as well as full conference programs, head to the event website. Expo entry is free of charge; rates apply for conference passes, which give access to all three conferences: the Test & Development, Software & AI, and Interior Design & Technology symposiums.

Catch Yoav’s presentation ‘Using portable scenarios and coverage metrics to ensure AV safety’ at the Autonomous Vehicle Test & Development Symposium, which is held at the Autonomous Vehicle Technology Expo in Stuttgart on May 21-23. For more information about the Expo and Conference, as well as full conference programs, head to the event website. Expo entry is free of charge, rates apply for conference passes, which give access to all three conferences, the Test & Development, Software & AI and Interior Design & Technology Symposium.