Assaf Mushinsky, chief scientist and co-founder of Brodmann17, sheds light on recent advances in AI and deep learning, and on the advances still needed to make the most of self-driving hardware and take the AV industry to the next level. He will go into more depth at the Autonomous Vehicle Software & AI Symposium, on May 21-23, in Stuttgart.

Tell us more about your presentation.

Perception, whether camera- or lidar-based, is the biggest challenge in AVs. Solving this problem in the most efficient way possible without compromising the accuracy is the only way to take this technology from premium vehicles to the mass market.

I will therefore review the deep learning software stack, including frameworks, inference engines and primitive libraries, along with their advantages and disadvantages and how to select the right one for your solution. Finally, I will present algorithmic optimization methods for improving inference speed, including Brodmann17’s unique technology, which offers an order-of-magnitude reduction in computation while providing state-of-the-art accuracy.

How will improvements in deep learning algorithms enable the mass production of AVs?

Deep learning has seen a huge improvement in the accuracy of perception tasks – crucial for autonomous driving. This leap in accuracy was achieved thanks to the availability of large quantities of data and vast computing power.

While the first generation of AI mainly focused on improving accuracy, it is now becoming clear that meeting its high computational requirements is the key to mass producing autonomous vehicles. There has been a huge recent effort to improve hardware, software and especially algorithms so they can be used in real-world products at real-world prices.

How will the industry drive down the cost for AVs?

To achieve substantial improvements in cost and efficiency and make the mass production of AVs possible, the efficiency of the entire stack must be improved. Time and talent must be invested in the non-trivial algorithms that truly hold the potential for an order-of-magnitude (or greater) improvement in power and accuracy, something that cannot be achieved by hardware progress alone.

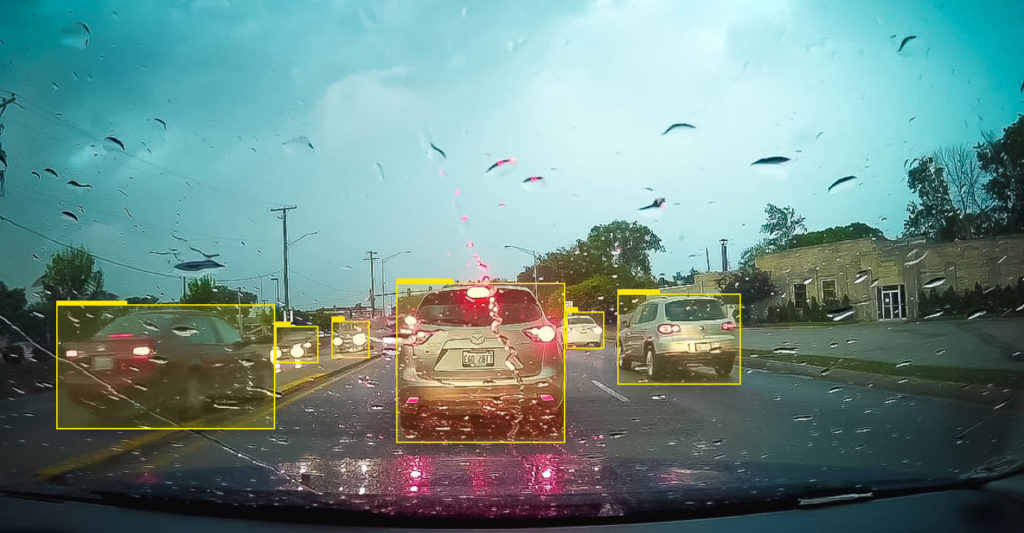

The challenges we face will only grow as we progress, because we will want to detect objects at ever-longer distances, at higher resolutions, with better accuracy and in all conditions, regardless of weather and lighting. Better software and better algorithms can help us extract more AI from any processor. This will enable more scalable solutions on a wider range of hardware at different price points.

What are the major challenges with deep learning?

Deep learning is new and still extremely challenging. Most deep learning solutions struggle to bridge the gap between accuracy demands and the available computing power. The benefit of using efficient code is limited, and network optimization most often comes at the cost of accuracy.

We are solving these issues by leveraging our deep learning technology, which we will present at the conference. This technology enables us to produce perception software that can run on everyday devices without compromising on accuracy. Additionally, finding the best models takes a lot of time and expertise. To augment our talented team of researchers, we’ve developed our own semi-automatic training platform that can support thousands of simultaneous experiments. Our semi-automatic architecture search enables us to train the optimal network for a specific task and hardware with minimal effort and cost, within a tight timeframe.

Assaf will present ‘Real-time deep learning for ADAS and autonomous vehicles’ at the Autonomous Vehicle Software & AI Symposium, which is held during Autonomous Vehicle Technology Expo in Stuttgart on May 21-23. For more information about the Expo and Conference, as well as full conference programs, head to the event website. Expo entry is free of charge, but rates apply for conference passes, which provide access to three symposiums: Test & Development, Software & AI and Interior Design & Technology.

Assaf will present ‘Real-time deep learning for ADAS and autonomous vehicles’ at the Autonomous Vehicle Software & AI Symposium, which is held during Autonomous Vehicle Technology Expo in Stuttgart on May 21-23. For more information about the Expo and Conference, as well as full conference programs, head to the event website. Expo entry is free of charge, but rates apply for conference passes, which provide access to three symposiums: Test & Development, Software & AI and Interior Design & Technology.