Claytex, the international consultancy specializing in modeling and simulation, has been developing a new generation of sensor models to enable autonomous vehicles to more accurately perceive the road ahead and identify obstacles to prevent accidents.

Identifying and analyzing the immediate environment, something human drivers do automatically, is critical to the successful use of artificial intelligence in autonomous vehicles. More accurate modeling of radar, lidar and ultrasound sensors in simulation will therefore enable better validation before these vehicles are used on public roads.

“We are initially developing a suite of generic, ideal sensor models for radar, lidar and ultrasound sensors, using software from rFpro, with a more extensive library to follow,” said Mike Dempsey, managing director, Claytex.

“rFpro has developed solutions for a number of technical limitations that have constrained sensor modeling until now, including new approaches to rendering, beam divergence, sensor motion and camera lens distortion.”

One of the biggest challenges of AV development is ensuring that the vehicle will respond on physical roads exactly as it did in virtual testing. Sensor models used to test an AV must therefore accurately reproduce the signals that real sensors produce in real situations.

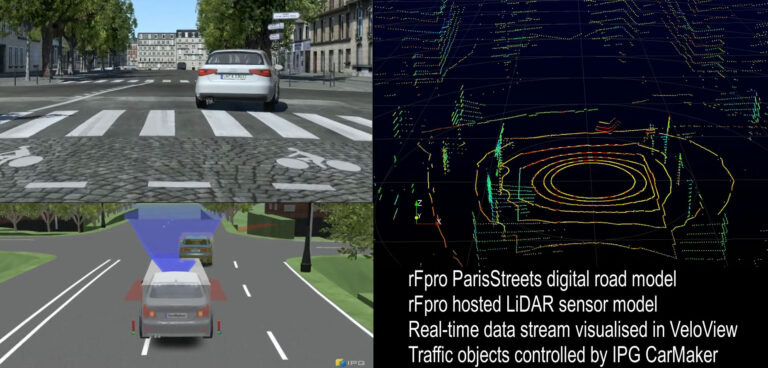

rFpro’s simulation software renders images using physical modeling. This makes the images suitable for machine vision systems, which must be fed sensor data that correlates closely with the real world, and so improves the validation of AV testing.

rFpro can adjust the lighting conditions of the simulation to match the angle and brightness of the sun at different times of day and at different latitudes. It can also reproduce reflections from a wet road surface and all weather types, including snow and heavy rain.
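To illustrate how the sun's angle depends on time of day and latitude, the following is a minimal sketch using a common textbook approximation for solar elevation. It is not rFpro's lighting model, which the article does not describe; the function name `solar_elevation_deg` and its parameters are hypothetical, and the formula ignores atmospheric refraction and the equation of time.

```python
import math


def solar_elevation_deg(latitude_deg, day_of_year, solar_hour):
    """Approximate solar elevation angle in degrees.

    Hypothetical helper for illustration only (not an rFpro API).
    latitude_deg: observer latitude, positive north.
    day_of_year:  1..365.
    solar_hour:   local solar time in hours, 12.0 is solar noon.
    """
    # Approximate solar declination (Earth's axial tilt is about 23.44 degrees).
    declination = math.radians(
        -23.44 * math.cos(2.0 * math.pi / 365.0 * (day_of_year + 10))
    )
    # Hour angle: the sun moves 15 degrees per hour relative to solar noon.
    hour_angle = math.radians(15.0 * (solar_hour - 12.0))
    lat = math.radians(latitude_deg)

    sin_elevation = (
        math.sin(lat) * math.sin(declination)
        + math.cos(lat) * math.cos(declination) * math.cos(hour_angle)
    )
    # Clamp to guard against floating-point values just outside [-1, 1].
    sin_elevation = max(-1.0, min(1.0, sin_elevation))
    return math.degrees(math.asin(sin_elevation))


if __name__ == "__main__":
    # Compare noon and early morning at 52 degrees north near the March equinox.
    print(solar_elevation_deg(52.0, 81, 12.0))
    print(solar_elevation_deg(52.0, 81, 8.0))
```

A negative result means the sun is below the horizon, which a simulation would treat as night-time lighting.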

Claytex has found camera modeling to be very powerful in rFpro because the software supports different lens calibration models, allowing each customer to be supported without requiring them to change their preferred calibration methods.

This includes calibration for lens distortion, chromatic aberration and support for wide angle cameras with up to a 360° field of view.
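As an illustration of what a lens calibration model does, the sketch below applies a two-coefficient radial distortion of the widely used Brown-Conrady form to a normalized image point. This is an assumption chosen for illustration: the article does not say which calibration models rFpro supports, and the function name `apply_radial_distortion` and the coefficients `k1` and `k2` are hypothetical.

```python
def apply_radial_distortion(x, y, k1, k2):
    """Map an ideal normalized image point to its radially distorted position.

    Hypothetical helper for illustration only (not an rFpro API).
    x, y:   ideal (undistorted) coordinates relative to the optical centre.
    k1, k2: radial distortion coefficients obtained from calibration.
    """
    r2 = x * x + y * y                      # squared distance from the centre
    factor = 1.0 + k1 * r2 + k2 * r2 * r2   # 1 + k1*r^2 + k2*r^4
    return x * factor, y * factor


if __name__ == "__main__":
    # A negative k1 pulls points towards the centre (barrel distortion).
    print(apply_radial_distortion(0.5, 0.0, -0.2, 0.05))
```

Points at the optical centre are unaffected, and the displacement grows with distance from the centre, which is why distortion is most visible at the edges of wide-angle images.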

“The artificial intelligence (AI) in an AV learns by experience, so must be exposed to many thousands of possible scenarios in order to develop the correct responses. It would be unsafe and impractical to achieve this only through physical testing at a proving ground because of the timescales required,” explained Dempsey.

“Instead a process of virtual testing in a simulated environment offers the scope to test many more interactions, more quickly and repeatably, before an AV is used on the public highway.”