Sama, a provider of computer vision solutions for ADAS and autonomous vehicles, has unveiled a scalable solution for the annotation of medium and long sequences of frames essential for automotive AI models.

The new solution is designed to reduce the total cost of ownership of complex automotive data for four of the top five automotive OEMs and their Tier 1 suppliers, the company says.

Sama has applied this method to medium-length sequences covering up to hundreds of miles, and is in the process of scaling it to even longer sequences.

Challenges and solutions

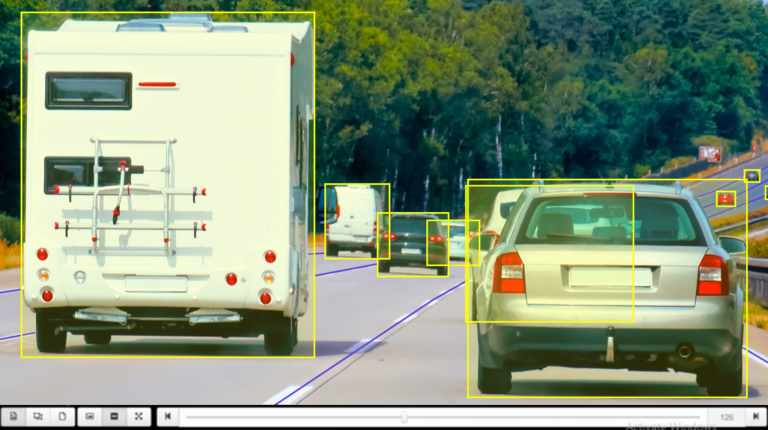

Annotating complex sequences involves balancing numerous factors, including the number of frames and the consistency of work done by multiple annotators. Inconsistencies can lead to rework and delays, increasing both costs and risk. High-quality annotated data is essential to minimize the risk of model failures, which can cause delays, drive up costs and create safety hazards in live environments.

Sama says it addresses these challenges by combining human feedback with proprietary algorithms that enhance sensor input and quickly surface biases or other problems. Before starting any project, the company selects and trains a team for each client, providing client-specific instructions and calibration.

Quality control and performance

Throughout the project, Sama implemented multiple quality control steps to ensure consistent annotations and a data set representative of a wide range of driving scenarios.

The company says the approach is critical for training models on diverse data reflecting different weather conditions, vehicle types and pedestrian behaviors. For example, a driver-assist model must accurately alert drivers if a car or pedestrian is too close when parking or backing up.

Sama says that on a complex 3D workflow for a Tier 1 supplier, its platform delivered 10 million shapes per day at a 99% quality rate for both dynamic and static 3D objects, annotating 35 million frames over eight months.