Three full days, 150+ exhibitors, 120+ conference speakers and thousands of visitors from across the European vehicle safety, validation and autonomous driving sector – ADAS & Autonomous Vehicle Technology Expo Europe 2023, which was held in Germany last week (June 13-15), has once again proved to be the industry’s leading showcase event.

“The ADAS & Autonomous Vehicle Technology Expo in Stuttgart is one of the biggest conferences and exhibitions focusing on ADAS/AD professionals that I am aware of,” said Ralph Grewe, perception product manager of the driverless innovation line at Continental. “It’s a great event to get an overview of the current trends and products relevant for ADAS/AD development, and a must-have in your calendar.”

“We wanted to see some of the latest technology available from suppliers, to understand areas where currently we’re not up to standard, and get a better overall understanding of the industry,” commented Oliver King, an ADAS development engineer at Nissan. “We’ve come in search of some key topics around connected vehicles and how to evaluate them, as well as data management, as we’re seeing vehicles with a lot more sensors now, and in turn, we have a lot more data to manage and analyze. [The show] has been really good, really interesting. There’s lots of interesting technology – and helpful people to explain it all to us.”

“It’s been amazing to see the AV industry working together and collaborating at the event,” added Camilla Fowler, head of safety assurance at Oxa (previously Oxbotica). “There have been some very thought-provoking talks at the conference, plus such a range of technologies at the exhibition, from simulation solutions to vehicle hardware. I thought the exhibition was genuinely great. I loved seeing the range of organizations – the big players plus the startups – all there together!”

“I’m an ADAS engineer at a vehicle truck company in Italy that is always interested in innovation. We are looking for new solutions and are very curious about what’s happening on the market and discovering the latest trends,” explained Guido Fusco, an ADAS systems engineer at Iveco. “We have been looking around to see what we can do to improve our products and to look toward the future with new solutions. I’m mostly focusing on ADAS systems and autonomous driving where the main challenges involve the sensors, perception and AI training – and there are a lot of very interesting companies here, so it’s been awesome.”

Exhibition highlights

Over 150 exhibitors specializing in ADAS and automated driving could be found across three halls at the Stuttgart Messe (which housed both ADAS & Autonomous Vehicle Technology Expo and the adjoining Automotive Testing Expo).

Established heavyweights such as Siemens, AVL, NI, Bosch, dSpace, ETAS and b-plus exhibited alongside a fascinating array of smaller, highly specialized companies. Visitors could see the latest developments from key players while also sourcing new contacts and potential solutions from an exciting mix of startups, many of which were making their first appearance at the event.

The latter included first-time exhibitor SafeAD, a startup spun out from the Karlsruhe Institute of Technology. The company leverages AI for human-like scene understanding, using data from automotive-grade full surround cameras, lidar and radar to detect and identify 3D objects and the road layout. It claims that its neural networks can also infer road elements, topology and more to create centimeter-accurate high-definition maps.

“We’re developing a 3D multisensor perception system for use in vehicles and consumer cars,” explained Dr Niels Ole Salscheider, CTO and co-founder of SafeAD. “We use a neural network to fuse the raw sensor data of cameras, lidar and radar to do object detection, tracking and prediction up to 300-400m. We can also infer local HD maps just from [camera] sensor data – in other words, you can do mapless driving in a Level 2+ system, or you can use our technology as a fallback or validation system for Level 3 or Level 4 vehicles.

“We have also developed a mapping approach that works using only automotive cameras. We use these to create a 3D reconstruction of the scene, also including dynamic objects, then we label the scene with our AI model, and then we have human annotators fix the last remaining parts. We have had a lot of interest from simulation companies who buy the scenarios [available in open scenario format] or maps and integrate them into their simulation.”

Overall, Salscheider was very pleased with how the show went: “We have really had a lot of interest, especially given that we are such a small company,” he said on the third day of the show. “All the major simulation companies have come to see our maps, and we have talked to three or four Tier 1s and OEMs, so it has been perfect for us.”

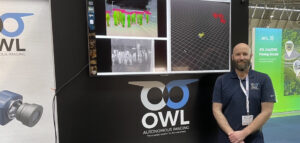

Another exciting startup making its debut in Stuttgart was Owl Autonomous Imaging, which reported strong interest in its Thermal Ranger imaging solution for use in pedestrian automatic emergency braking (PAEB) and other ADAS applications supporting L2, L2+ and L3/L4 requirements. The company’s monocular thermal camera technology enables 2D and 3D perception for object classification, 3D segmentation of objects, RGB-to-thermal fusion and highly accurate distance measurements. Owl claims that Thermal Ranger not only enables cars to see at night but can also detect how far away a living object is and what it is.

“We’re showing monocular thermal imaging, specifically for ADAS,” explained Alex Gilblom, director of sales at Owl Autonomous Imaging. “We use a single long-wave infrared thermal imager that is able to detect, segment and range pedestrians. Using AI convolutional neural networks, you can infer the distance of pedestrians using a single camera – this is a key differentiator, as normally you would need a stereo or pair of cameras, so in effect we’ve now cut in half the amount of hardware that you need.”

The new platform is well timed, given the latest rulemaking from the US Department of Transportation’s National Highway Traffic Safety Administration (NHTSA), which seeks to require automatic emergency braking and pedestrian AEB systems on nearly all passenger cars and light trucks within the next three years.

“Specifically, the new rule proposed by NHTSA says pedestrians must be detected at night and in total darkness,” Gilblom told ADAS & Autonomous Vehicle International on the first morning of the show. “We are offering a passive technology that requires no external illumination and can reliably detect a pedestrian and bring the car to a complete stop. We have had both automotive OEMs and Tier 1s approach us here in Stuttgart, asking for additional information. It’s only been a couple of hours, but we have already had some great leads.”

First-time exhibitor and startup Parallel Domain showcased its eponymous data-generation platform that enables AI developers to generate synthetic data for training and testing perception models at a scale, speed and level of control that is impossible with data collected from the real world.

The company says the wide range of data that users can generate within the Parallel Domain platform better prepares their models for the unpredictability and variety of the physical world. The standard method of collecting and manually labeling data from the real world is prohibitively slow and costly, with developers often waiting weeks or months to obtain new data to improve their models. Even then, human labeling errors, class imbalances, edge cases and restrictions around privacy hinder ML developers from getting their systems to market.

“We’re here to show how you can use synthetic data to train your ADAS models to perform better,” explained Alan Doucet, head of sales at Parallel Domain, on the first morning of the show. “With Parallel Domain, you’re limited only by your imagination. Any outdoor scenario can be crafted using our API – you can specify the location, dictate vehicle density, set the weather and time of day, sprinkle in debris and so much more. The API harnesses generative AI and 3D simulation to create complex scenes, corner cases, specific behaviors and rare objects – anything the customer can dream of.”

As for the expo, Doucet said the company had welcomed some important visitors within an hour of the doors opening on the first day: “We’ve already had some people stop by from Porsche, so it’s been a great start!”

A wide range of simulation companies were in Stuttgart, but one new development in particular caught the eye on BeamNG’s booth, where there were live demos of an innovative driving simulator with the latest advanced auto-stereoscopic display technology from Dutch company Dimenco.

The BeamNG simulator shown during the expo incorporated Dimenco’s state-of-the-art PC display technology, featuring 2D/3D switchable (micro) lenses, eye tracking and an impressive 8K resolution. “We are thrilled to showcase immersive simulations utilizing this groundbreaking display technology,” said Thomas Fischer, co-founder and CEO of BeamNG. “By immersing users in a 3D world and integrating our physics simulation, we ensure an accurate representation of all ADAS features related to vehicle dynamics and physics.”

At its core, Dimenco’s technology projects two distinct images onto a screen, one for each eye, creating a sense of depth and immersion. Real-time tracking of the user’s eyes and head movements adjusts the pixels and perspective of the displayed images, aligning them with the user’s point of view. This creates the illusion that the objects on the screen exist in the user’s physical space rather than being mere projections on a flat surface. The technology is aptly referred to as ‘Simulated Reality’.

“The outcome is an impressively realistic experience that significantly benefits drivers,” explained Guillaume Gouraud, senior director of strategic partnerships at Dimenco. “We have received feedback from professional drivers who found that their skills did not translate well into traditional simulators. However, they now report that the physical realism of our simulator, combined with accurate car physics, provides an authentic experience where they have a keen sense of braking distance and spacing between vehicles in traffic.

“We believe this technology can greatly assist driver-in-the-loop simulators, which is why we are excited to be a part of this exhibition. Simulation teams from various automotive brands have expressed interest, stating that Simulated Reality offers a preferable alternative to VR headsets and surpasses traditional simulations on 2D displays. Simulated Reality is more accessible and user-friendly, allowing the user to stay connected with people in their surroundings.”

Conference insights

Alongside the exhibition, a dedicated conference featured over 120 expert speakers across three separate rooms. Dr Andreas Richter, engineering program manager of operational design domains, got Day 1 off to a great start with an exclusive insight into Volkswagen Commercial Vehicles’ approach to operational design domain (ODD) definition, and the importance of this area in achieving safe autonomous operation.

During his presentation, titled ‘Operational design domain between the poles of development, approval and operations’, Dr Richter emphasized that an ODD definition should always tell the same story, regardless of how it has been transformed, and that upcoming standards need to address these requirements.

“Standards are important for new approaches, especially when stakeholders with different perspectives are involved or will be involved later,” said Richter. “The ODD as a core description of AD systems will be processed by different stakeholders such as manufacturers, mobility service providers, public and approval authorities, and others.”

Volkswagen Commercial Vehicles also staged an exclusive lunchtime workshop to highlight the key challenges – and how to solve them. “We want to raise awareness that the ODD is not merely a simple sheet of paper with a few statements, but rather an important piece of the AD development and operation and should be defined with an open mind to try to address the needs of the relevant stakeholders,” continued Richter.

The first morning also saw a fascinating presentation from David Hermann, a development engineer at Porsche Engineering Services. In his talk, titled ‘Driving in the high dynamics area: a simulation environment for automated driving on racetracks’, Hermann discussed the race between automotive manufacturers to bring new technologies into vehicles, and how it is being fueled by tighter emission standards, stricter safety requirements and higher levels of driving automation. He revealed how racetrack simulation is increasingly important for Porsche, providing a safe, cost-effective and efficient means of evaluating vehicle performance.

Hermann showed how Porsche is using a modular simulation environment that combines a high-fidelity track environment with a full vehicle model and an optimized model predictive control (MPC) scheme. The MPC scheme includes a method for optimizing the trajectory and speed profile to generate a realistic driving profile with the best lap performance.

“Simulating a high-fidelity track environment and full vehicle model is an efficient and cost-effective approach for testing and developing automated driving,” he explained. “Developers can test and refine vehicle components and systems in a safe and controlled setting, minimizing the need for expensive and time-consuming real-world testing.”

An informative update on the ADScene initiative was provided by Dr Emmanuel Arnoux, Automated Driving Safety & Validation Working Group co-leader for the platform of the French automotive industry (PFA), on the second day of the conference.

As part of the ‘ADAS and AD homologation and validation stakes and solutions to achieve the safety challenge’ session, Dr Arnoux discussed a range of French publicly funded research projects concerned with ADAS and AD safety, safety demonstration, numerical modeling and simulation tools, as well as scenario-based automated system design, validation and homologation.

“These projects allow the French automated mobility ecosystem to build the French legal framework for automated vehicle homologation and for authorization before commercial services of automated road transportation systems,” said Dr Arnoux. “Among these tools, the scenarios library plays a key role.”

Dates for your calendar

With the 2023 European show now closed, those interested in the latest developments and innovations in ADAS and automated driving are advised to make a note of the dates for the US version of the show: ADAS & Autonomous Vehicle Technology Expo California takes place in Santa Clara, September 20-21, 2023. The European event returns to Stuttgart next summer: the dates for your diary are June 4-6, 2024.