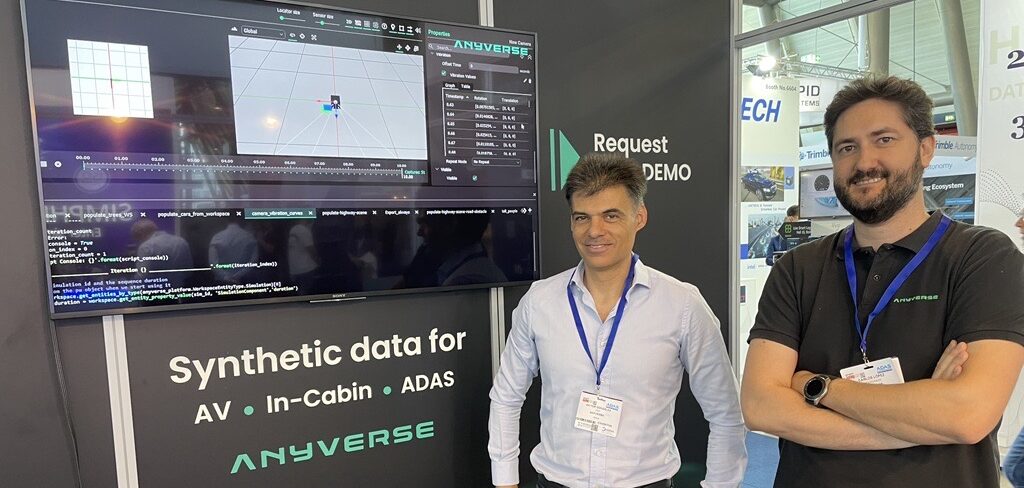

Anyverse is at ADAS & Autonomous Vehicle Technology Expo to showcase its recently launched hyperspectral synthetic data platform for in-cabin monitoring, autonomous driving and ADAS development. The company says the platform can cover data needs across the entire development cycle, from initial design and prototyping, through training and testing, to the final ‘fine-tuning’ of the system to maximize its capabilities and performance.

“Anyverse is not another synthetic data platform; Anyverse brings its standalone hyperspectral simulation engine, which enables advanced perception developers to physically simulate optics and sensors and generate high-fidelity data sets, reducing the domain gap and achieving higher confidence levels,” explained Víctor Gonzalez, CEO, on the first morning of the show.

Fully modular, the platform enables efficient scene definition and data set production. This starts with Anyverse Studio, a standalone graphical interface application that manages all Anyverse functions, including scenario definition, variability settings, asset behaviors, data set settings and inspection. Data is then stored in the cloud, where the Anyverse cloud engine handles final scene generation, simulation and rendering, producing data sets using a distributed rendering scheme.

The company says its synthetic data platform, together with its hyperspectral sensor simulation pipeline, which covers RGB HD cameras, near-infrared (NIR) cameras and lidar, is ideal for the development of robust in-cabin and AV/ADAS systems.

“Our team is happy to show technology and platform demos to those developers and data engineers who want to see it for themselves,” continued Gonzalez. “They will love how they can accelerate the development of their AV, ADAS and in-cabin monitoring systems with pixel-accurate synthetic data that mimics exactly what their sensors see.”

Find out more at Booth 6516.