If humans need only visual input to drive cars, why shouldn’t computers? In the past, most self-driving car (SDC) technologies have relied on multiple signals, including cameras, lidar, radar and digital maps. But recently there’s been a growing belief among some scientists and companies that autonomous driving will become possible with camera input only.

At the heart of these hopes for camera-only is the continued evidence that with enough data and computing power, and the right deep learning architecture, we can push artificial intelligence systems to do things that were previously thought to be impossible.

At least one company has taken the bold approach of using only cameras for its self-driving technology. And continued research on computer vision suggests that deep learning might one day overcome some of the limits that prevent it from processing visual data as humans and animals do. But most self-driving car companies are reluctant to put full trust in image processing and continue to bank on the future of lidar and other sensory data.

Camera-only self-driving cars

At the forefront of the camera-only movement is Tesla, which didn’t believe in lidar in the first place. During a talk at the Computer Vision and Pattern Recognition (CVPR) Conference in June 2021, Tesla’s chief AI scientist, Andrej Karpathy, gave a talk on how the company is using neural networks and camera input to estimate depth, velocity and acceleration.

Tesla has several elements in its favor when it comes to creating a vision-only self-driving system. First, as the only self-driving company that has full control over the entire hardware and software stack, Tesla has unrivaled access to data for training deep learning models. It created a training data set of one million 10-second videos in different road, weather and lighting conditions thanks to the vast amounts of video and vehicle data it is collecting from the millions of cars it has sold across the world.

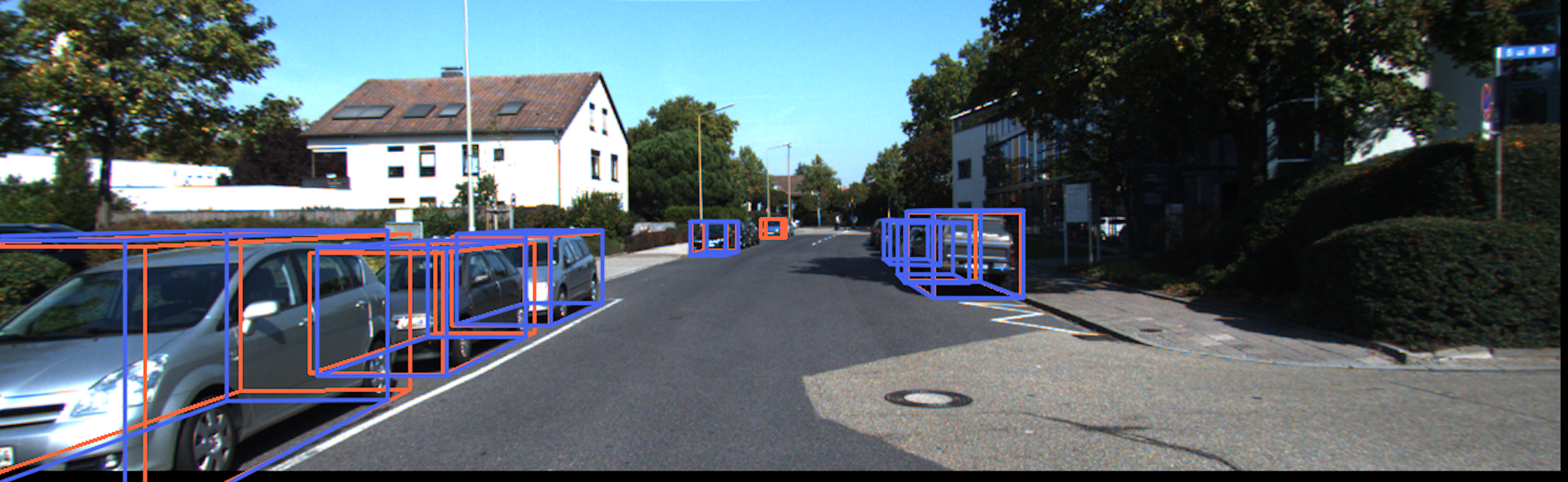

Second, it created a semi-automated labeling system that speeds up the process of annotating training examples. The Tesla team used advanced object detection neural networks to detect and annotate object bounding boxes, velocity and depth information in the training videos. The neural networks go back and forth in the video to self-correct their own predictions. A team of human engineers looked at the final results and made minor corrections. The on-car neural network is then trained on the labeled data.

This approach enabled Tesla to scale its efforts to hundreds of millions of annotated video frames with very little manual effort. And with every piece of human feedback, the auto-labeling system became better at making inferences and less reliant on manual adjustments. The process also helped engineers discover hundreds of triggers where the neural networks performed poorly and needed extra training and special adjustments. This made the neural network more resilient against the uncertainties of the real world.

And finally, Tesla used a modular deep learning architecture that combines transformers and convolutional networks to process video input from multiple cameras and perform tasks such as object detection and trajectory prediction. This advanced architecture provides more visibility and control of how the neural network is performing the subset of tasks needed for sensing the car’s environment.

During his CVPR talk, Karpathy revealed that the computer vision system had advanced to the point where the vehicle’s radar were holding things back and “starting to contribute noise”.

“We deleted the radar and are driving on vision alone in these cars,” he said.

The case for vision–only

The main argument for vision-only self-driving cars is nature. “Obviously humans drive around with vision, so our neural net is able to process visual input to understand the depth and velocity of objects around us,” Karpathy said in his CVPR talk.

Other scientists agree with this. “I personally believe that SDCs based on pure CV [computer vision] will be a reality in the future,” says Tianfu Wu, assistant professor of electrical and computer engineering at North Carolina State University.

Wu and his colleagues at NCSU recently developed a machine learning technique called MonoCon, which can extract 3D information like depth, dimensions and bounding boxes from 2D images without the need for extra signals such as lidar and depth maps. The idea behind MonoCon is to use ‘monocular contexts’ – bounding box annotations in the training data – to map pixels to 3D information such as the center of objects and their direction. The learned patterns enable the neural network to estimate cars’ locations and velocity from camera frames.

Techniques such as MonoCon can be very useful in the development of self-driving car technology that only needs camera input. According to the researchers’ experiments, MonoCon, which was accepted and presented at this year’s Association for the Advancement of Artificial Intelligence (AAAI) Conference on Artificial Intelligence, outperforms other AI programs for extracting 3D data from flat images.

The next step for Wu and his fellow researchers is to reproduce the depth-detection mechanism of

human vision.

“I think the stereo-camera setting will be one of the important components in the pure CV system/platform, like we humans have two eyes for depth perception,” he says. “With stereo images, we can develop powerful depth estimation methods that can be used to play the role of lidar.”

The researchers are currently working on integrating MonoCon with stereo settings. “It will

be interesting to see, and we will certainly explore, the potential of this type of method/paradigm for SDCs,” Wu asserts.

The limits of computer vision

Gabriel Kreiman, a professor at Harvard Medical School and associate director of MIT’s Center for Brains, Minds and Machines, also agrees that nature proves vision-only is probably enough for driving. “We use mostly our two eyes to navigate (and auditory information to a lesser degree as well),” he says.

But although Kreiman strongly believes that machines will eventually be able to do anything that humans do, he thinks we’re not there yet.

“Despite notable advances in computer vision, our visual system remains far superior to the best AI algorithms in the majority of visual tasks,” he says. In his book, Biological and Computer Vision, Kreiman highlights some of the key differences that make human vision more stable and reliable than current computer vision systems.

“Computer vision algorithms are still fragile (e.g. they are easy to fool through adversarial attacks) and struggle to extrapolate to out-of-distribution samples,” he says.

The problems Kreiman highlights are at the heart of some of the most heated discussions and areas of research in AI. Adversarial examples are small modifications that can fool deep learning systems.

In one study, researchers showed that by adding small stickers to stop signs, they could trick deep learning models into mistaking them for speed limit signs. Another study showed that stickers placed on the road itself could cause self-driving cars to veer into the incoming traffic lane.

Out-of-distribution fragility is AI’s inability to cope with data that is not included in its training examples. This is why you sometimes see self-driving cars making mistakes that any average human driver would avoid, such as driving straight into an overturned truck or crashing into fire trucks parked at odd angles.

“I never encountered a moose while driving but I think that I would have some idea of what to do. It is hard to prepare computer vision for novel scenarios,” Kreiman says, while also voicing optimism about the future. “I don’t think that this will always be so. We will be able to build computer vision systems that match and surpass human vision. This includes computer vision for autonomous vehicles.”

Some of the most promising directions of research in this regard come from two pioneers

of deep learning: Yoshua Bengio, a computer science professor at the Université de Montréal

and the scientific director of Montreal Institute for Learning Algorithms (MILA); and Yann LeCun, chief AI scientist at Meta.

Bengio is working on a new generation of deep learning systems that can integrate causality, which means they will be able to deal with scenarios beyond their training data. LeCun is exploring neural networks that can learn and reason like humans and animals, bringing them a step closer to dealing with the uncertainties of the real world.

However, other players in the self-driving car industry remain unconvinced that pure vision approaches will be enough to create safe self-driving car technology.

“It’s a bit of a lazy opinion that because humans don’t need lidar, neither should autonomous vehicles,” says Iain Whiteside, principal scientist and director of assurance at Five. “In the US, 33% of crashes are due to so-called human decision errors, a significant subset of which are speed and distance estimation errors. That is, humans not accurately judging the speed or distance of other vehicles, which leads to a collision.”

Unlike humans, lidar are very precise in estimating depth and velocity, which would reduce these kinds of collisions. Whiteside also says that even though fewer than 25% of road miles are driven at night, almost 50% of fatal accidents happen after dark.

“They aren’t all through increased alcohol intoxication rates: visibility is a factor,” he says. “Lidar happen to not care if it’s day or night. Perhaps if humans had lidar they would be better drivers.”

Multiple sensors improve safety

Whiteside believes that different sensors can complement each other. “Every sensing modality has its weaknesses. This is why almost all AV developers have diversity in their sensors,” he says. “Lidar doesn’t cope well when there is air particulate, such as rain or fog. Thankfully, other sensors may be relied on in these situations. The safety assurance of a robust AV will ensure that sensor fusion accounts for uncertainty in the sensors.”

His thoughts are echoed by Mo Elshenawy, SVP of engineering at SDV developer Cruise: “For us, the question is less about how much you need lidar – or any other sensor for that matter – and more about why wouldn’t you use it,” he says. “Cameras alone do a decent job with high-resolution colors and important visual cues but have challenges when it comes to depth information, weather and light resiliency.”

Cruise is testing its technology in San Francisco, a dense, complex urban environment where cyclists and pedestrians are always moving near the company’s self-driving cars. In such circumstances, it is vital for the self-driving cars to have a redundant system that can detect everything in all lighting and weather conditions.

“Safety is a non-negotiable principle at Cruise, and a multisensor approach that covers the spectrum of short- and long-range challenges in different conditions is an important tool for enabling that at the time being,” Elshenawy explains.

The car brain

Another argument in favor of lidar is that technology does not necessarily need to be a replication of the human brain.

“It’s true that humans drive using only ‘cameras’ (our eyes) but this doesn’t mean that a single visual input is the best way to solve the challenges of autonomous driving,” says Raquel Urtasun, founder and CEO of self-driving technology company Waabi.

Waabi is also using lidar and cameras to complement one another and reduce the risks of collisions and other accidents on roads. “At Waabi, we think of the SDV as being operated by an artificial ‘brain’ (at Waabi, we call our brain the ‘Waabi Driver’),” Urtasun says.

In organisms with nervous systems, the brain, body, sensory and motor systems have co-evolved based on their needs and environments. For instance, bats have evolved a complicated echolocation apparatus and an expanded audio cortex to be able to navigate dark places. Sea turtles have sensors that can track Earth’s magnetic fields to help them stay on course through thousands of miles of blue oceans. Likewise, to safely navigate roads, self-driving cars might need multimodal sensors and an AI system that can bring them all together.

“One of the purported virtues of autonomous vehicles is that they will improve on human performance, and I’d like to think that they will improve on humans in ways other than ‘not texting and driving’ or ‘not drinking and driving’,” Five’s Whiteside says.

As advances in AI continue to bring us closer to bridging the gap between human and computer vision, it remains to be seen if camera-only will be the best solution for self-driving cars.

“Computer vision will eventually match human vision capabilities for autonomous navigation. This does not imply that this is the way to go,” Kreiman says. “Planes fly in ways that were inspired by but are distinct from birds. Computers play chess different from humans (yet beat humans). Whether we will get to autonomous navigation faster/better/safer/cheaper through computer vision or other technologies such as lidar remains unclear.”

This feature was first published in the April 2022 edition of Autonomous Vehicle International. You can subscribe to receive future issues, for free, by filling out this simple form.

This feature was first published in the April 2022 edition of Autonomous Vehicle International. You can subscribe to receive future issues, for free, by filling out this simple form.