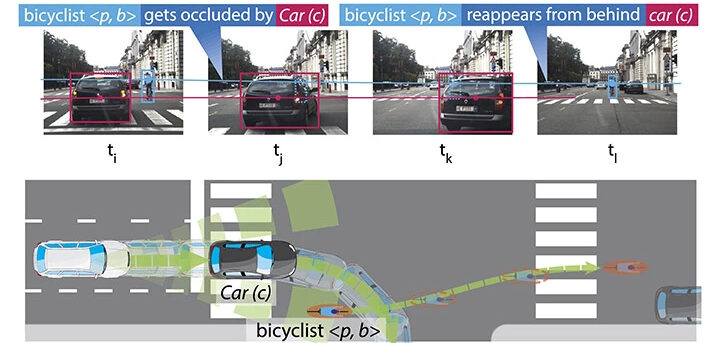

One particularly vexing problem for autonomous driving systems is how to deal with situations where sensors lose sight of other road users, for example when a cyclist drops out of view behind a car or another obstruction.

Now, researchers at Örebro University in Sweden say they have developed an AI application that can account for such occurrences. “We have succeeded in developing a new way for self-driving vehicles to understand and explain the dynamics of our world just like people do,” said Mehul Bhatt, professor of computer science at the university.

The findings are presented in a study recently published in the journal Artificial Intelligence (AIJ), titled “Commonsense visual sensemaking for autonomous driving – On generalised neurosymbolic online abduction integrating vision and semantics.”

The researchers noted that in traffic, humans constantly anticipate what will happen next. Current self-driving vehicles, and AI systems in general, lack this reasoning ability. In the study, Bhatt and colleagues in Germany and India argue that combining modern neural learning with commonsense reasoning can overcome some of these shortcomings. “The developed AI method results in self-driving vehicles learning to understand the world much like humans. With understanding also comes the ability to explain decisions,” added Bhatt.

With this approach, a self-driving vehicle can recognize that a cyclist hidden behind a car for a few seconds still exists until it reappears. According to the researchers, the method enables a wide range of similar human-like commonsense capabilities that have not been achievable in self-driving vehicles or in other AI technologies based on machine learning alone. “Our method lets a self-driving vehicle understand a course of events – in this case, that visibility is blocked by a car and that after the car has passed, the cyclist will be visible again. This level of understanding is essential for self-driving vehicles to be traffic-ready under different driving conditions and environments,” continued Bhatt.

The AI method also enables autonomous vehicles to explain why they made a particular decision in traffic, such as braking suddenly. Bhatt stressed, “It is of utmost importance that we do not have non-transparent technologies driving us around that no one fully understands, neither the developers of the AI, nor the manufacturer or engineers of the vehicles themselves. If self-driving cars are to share the same space as people, we need to understand how these cars are making decisions.”

Such transparency is also critical for investigating accidents, resolving insurance issues and assisting people with special needs. “At the end of the day, standardization is crucial. We need to achieve a shared understanding of the technologies in self-driving cars – as we do with the technologies in airplanes. At the moment, we’re far from it. This will only happen if we fully understand the technologies we’re developing,” noted Bhatt.

In addition to developing AI technology, Bhatt and doctoral student Vasiliki Kondyli, also at Örebro, are studying how humans behave in traffic by having test subjects drive in a simulator under experimentally controlled traffic situations.

“Results from such human behavior studies are utilized to develop human-centered AI technology for self-driving vehicles that can perform at a level that meets human expectations,” explained Bhatt. “I want to contribute to the development of autonomous vehicle technologies that safely and legally take us from point A to point B while also fulfilling accessibility requirements and societal norms. Since we’d never allow a human to drive a car without a driver’s license, I think we should put at least the same demands on autonomous vehicles.”